How AI is Making Data Science Accessible to All Employees

The rapid integration of generative artificial intelligence into everyday business workflows is fundamentally reshaping how organizations interact with data. What was once the exclusive domain of trained analysts and data scientists is now becoming accessible to employees across departments, regardless of their technical background. By 2026, the presence of generative AI within enterprises means that the ability to extract meaning from data is no longer constrained by knowledge of complex tools, query languages, or specialized dashboards. Instead, insight can emerge through simple conversation, allowing people to ask questions in plain language and receive meaningful, context-aware answers. This shift has profound implications for productivity, decision-making, and organizational culture, but it also introduces new responsibilities around education, governance, and safety.

For decades, organizations invested heavily in data infrastructure while simultaneously struggling to ensure that the information stored in those systems actually informed everyday decisions. Data warehouses grew larger, dashboards became more sophisticated, and analytics teams expanded, yet many employees still felt disconnected from the insights supposedly available to them. The barrier was not the absence of data, but the expertise required to access and interpret it. Learning SQL, mastering business intelligence tools, and understanding statistical concepts represented a steep learning curve for people whose primary roles lay elsewhere. Generative AI changes this dynamic by acting as an intelligent intermediary between humans and data systems.

With generative AI embedded into analytics platforms, enterprise software, and even internal chat tools, employees can interact with data in the same way they communicate with colleagues. A sales manager can ask why revenue dipped in a particular region last quarter and receive an explanation supported by trends, comparisons, and relevant context. A human resources professional can explore patterns in employee retention without constructing queries or navigating complex filters. An operations lead can investigate supply chain delays by asking follow-up questions in natural language, refining the inquiry as new information emerges. In this environment, the act of analysis becomes conversational rather than technical.

This transformation effectively democratizes data science. While it does not eliminate the need for professional data scientists, it expands analytical capability across the organization. Employees become empowered to test assumptions, validate ideas, and explore scenarios on their own. Decision-making accelerates because fewer requests need to funnel through centralized analytics teams. Insights surface closer to where decisions are made, reducing delays and misunderstandings. Over time, this leads to a culture in which curiosity about data becomes normal rather than exceptional.

However, the idea that everyone can become "a data scientist" through generative AI does not imply that expertise is irrelevant. On the contrary, it elevates the importance of foundational understanding. When people rely on conversational interfaces to explore data, they still need to grasp what the data represents, how it was collected, and what its limitations are. Without this baseline literacy, there is a risk of misinterpreting results or placing undue confidence in outputs that appear authoritative but may be incomplete or biased. Generative AI can lower the barrier to access, but it cannot replace critical thinking.

As a result, one of the central priorities for enterprises in 2026 is building and maintaining data literacy across the workforce. Data literacy in this context goes beyond the ability to read charts or understand basic metrics. It encompasses an understanding of data quality, context, uncertainty, and ethical use. Employees need to recognize the difference between correlation and causation, understand how time frames and sample sizes influence conclusions, and be aware of how biases can enter datasets. When generative AI presents an insight, users should be equipped to ask whether it makes sense and what additional information might be needed before acting on it.
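To make the point about sample sizes concrete, here is a minimal sketch. It assumes a simple normal-approximation interval for a proportion, and the retention figures are invented for illustration. The function name `proportion_interval` is hypothetical and does not come from any particular analytics tool.

```python
import math


def proportion_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% interval for a proportion (normal approximation).

    Illustrative only: real analyses may call for a more robust method,
    especially with small samples or proportions near 0 or 1.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    # Clamp to the valid range for a proportion.
    return max(0.0, p - margin), min(1.0, p + margin)


# Invented example: the same 80% retention rate from two sample sizes.
small_team = proportion_interval(16, 20)        # 16 of 20 employees retained
whole_company = proportion_interval(1600, 2000)  # 1600 of 2000 retained

print("20 employees:  ", small_team)
print("2000 employees:", whole_company)
```

Both samples show the same 80% retention rate, but the interval for 20 employees spans roughly 62% to 98%, while the interval for 2,000 employees spans roughly 78% to 82%. An AI-generated summary that reports only "80% retention" hides that difference, which is why a data-literate reader asks how many records sit behind the number.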

Training programs are therefore evolving. Instead of focusing solely on how to use specific tools, organizations are emphasizing how to think about data. Workshops, internal courses, and ongoing learning initiatives aim to help employees frame better questions, interpret answers responsibly, and understand the assumptions underlying AI-generated insights. This kind of education is role-specific, tailored to how different teams use data in their daily work. A marketing professional’s data literacy needs differ from those of a finance analyst, yet both benefit from a shared foundation.

Alongside education, safety and governance have become critical considerations. Generative AI systems often have access to sensitive enterprise data, including financial information, customer records, and proprietary operational details. Allowing widespread access without clear rules introduces risks related to privacy, compliance, and intellectual property. In 2026, responsible organizations recognize that empowering employees with AI-driven analytics must be accompanied by robust usage policies that are clearly defined and consistently enforced.

These policies address questions such as what data can be accessed through generative AI tools, how outputs can be shared, and which decisions require additional human review. They clarify boundaries around sensitive information, ensuring that employees do not inadvertently expose confidential data or violate regulatory requirements. Importantly, these guidelines are not hidden in legal documents that few people read. They are communicated in practical terms, integrated into training, and reinforced through the design of the tools themselves.
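One way such rules might be reinforced through tool design is a small policy check that runs before a question ever reaches the data. The sketch below is an assumption-laden illustration: the role names, sensitivity levels, and the `UsagePolicy` and `can_query` names are all hypothetical, and a real deployment would draw them from the organization's own governance framework.

```python
from dataclasses import dataclass

# Hypothetical sensitivity levels, ordered from least to most restricted.
SENSITIVITY_ORDER = ["public", "internal", "confidential"]


@dataclass(frozen=True)
class UsagePolicy:
    max_sensitivity: str        # most sensitive data the role may query
    may_share_externally: bool  # whether outputs can leave the company
    needs_human_review: bool    # whether decisions require a second look


# Hypothetical per-role policies, for illustration only.
POLICIES = {
    "sales": UsagePolicy("internal", False, False),
    "finance": UsagePolicy("confidential", False, True),
}


def can_query(role: str, dataset_sensitivity: str) -> bool:
    """Return True if the role may query data at this sensitivity level."""
    policy = POLICIES.get(role)
    if policy is None:
        return False  # unknown roles are denied by default
    allowed = SENSITIVITY_ORDER.index(policy.max_sensitivity)
    requested = SENSITIVITY_ORDER.index(dataset_sensitivity)
    return requested <= allowed


print(can_query("sales", "internal"))      # within the sales policy
print(can_query("sales", "confidential"))  # above the sales policy
```

Denying unknown roles by default is a deliberate choice in this sketch: it keeps a gap in the policy table from silently becoming a gap in protection.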

Trust plays a central role in the successful adoption of generative AI for data analysis. Employees must trust that the system is providing accurate, unbiased information, while leaders must trust that employees are using the tools responsibly. Transparency helps bridge this gap. When AI systems explain how conclusions are reached, reference underlying data sources, and indicate levels of confidence or uncertainty, users are better equipped to make informed judgments. This transparency also reinforces learning, as employees gain insight into how data-driven reasoning works.

At the organizational level, the widespread use of generative AI reshapes the relationship between centralized data teams and the rest of the business. Data scientists, analysts, and engineers shift from being gatekeepers of insight to enablers of capability. Their role increasingly involves curating high-quality datasets, building reliable models, and defining standards that ensure consistency and accuracy. They also act as educators and advisors, helping other teams understand how to use AI tools effectively and responsibly.

This evolution can relieve pressure on analytics teams, freeing them to focus on more complex problems that require deep expertise. At the same time, it demands close collaboration across functions. Governance frameworks, training materials, and tool configurations are most effective when developed with input from multiple stakeholders, including IT, legal, compliance, and business units. Generative AI becomes not just a technology initiative, but an organizational one.

The benefits of this approach extend beyond efficiency. When employees across an organization feel capable of engaging with data, it fosters a sense of ownership and accountability. Decisions are more likely to be justified with evidence rather than intuition alone. Teams can experiment, learn from outcomes, and adapt more quickly to changing conditions. In competitive markets, this agility can be a significant advantage.

Yet it is important to acknowledge the limitations and risks that accompany generative AI. Models can reflect biases present in training data, misinterpret ambiguous questions, or generate plausible-sounding but incorrect explanations. Overreliance on AI-generated insights without human oversight can lead to errors that scale rapidly across an organization. This is why data literacy and safety are inseparable priorities. Empowerment without understanding can be as dangerous as restricted access.

By 2026, leading enterprises recognize that the goal is not to replace human judgment, but to augment it. Generative AI serves as a powerful assistant, handling complexity and surfacing patterns, while humans provide context, values, and responsibility. When employees understand both the capabilities and the limitations of these tools, they can use them to enhance their work rather than blindly accept their outputs.

In this new landscape, success depends on balance. Organizations must invest in intuitive AI-driven systems that make data accessible, while simultaneously investing in people’s ability to think critically about what they see. They must encourage exploration and innovation, while establishing clear boundaries that protect sensitive information and ensure compliance. They must celebrate the idea that anyone can ask meaningful questions of data, while maintaining respect for the expertise required to manage and interpret it at scale.

The notion that everyone in an organization can effectively become a data scientist captures the spirit of this transformation, but it is ultimately a metaphor. What is really emerging is a workforce that is more fluent in data-driven thinking, supported by generative AI that removes unnecessary technical barriers. In 2026, the enterprises that thrive will be those that treat this shift not as a simple technology upgrade, but as an opportunity to redefine how people learn, decide, and collaborate. By prioritizing data literacy, safety, and shared understanding, they can unlock the full potential of generative AI while avoiding the pitfalls that come with its power.

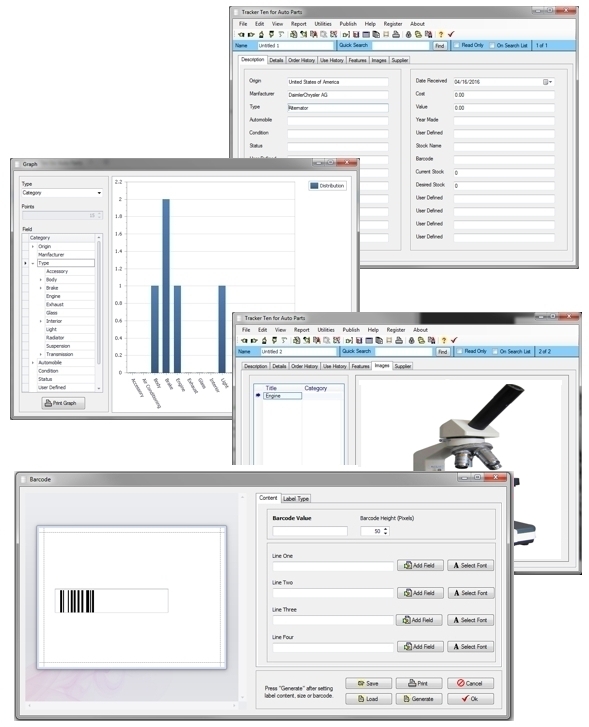

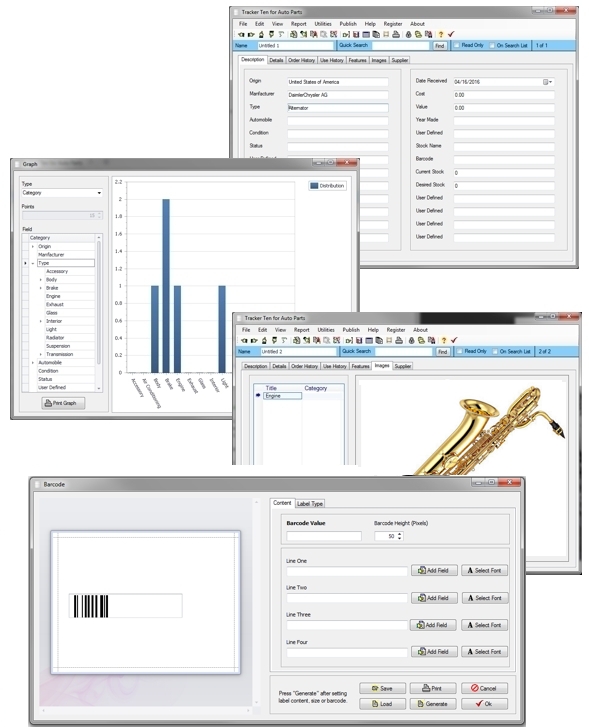

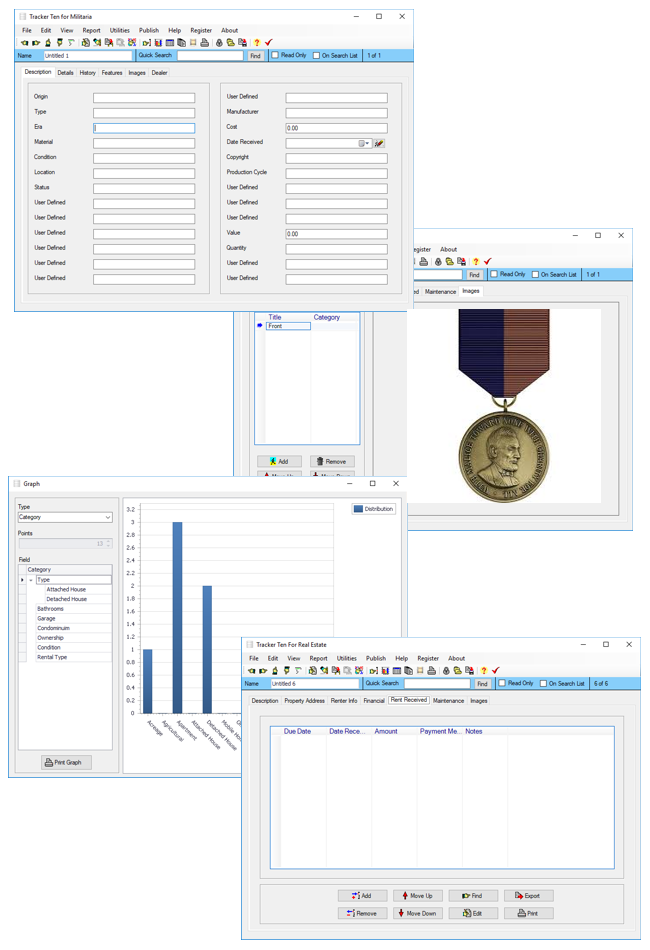

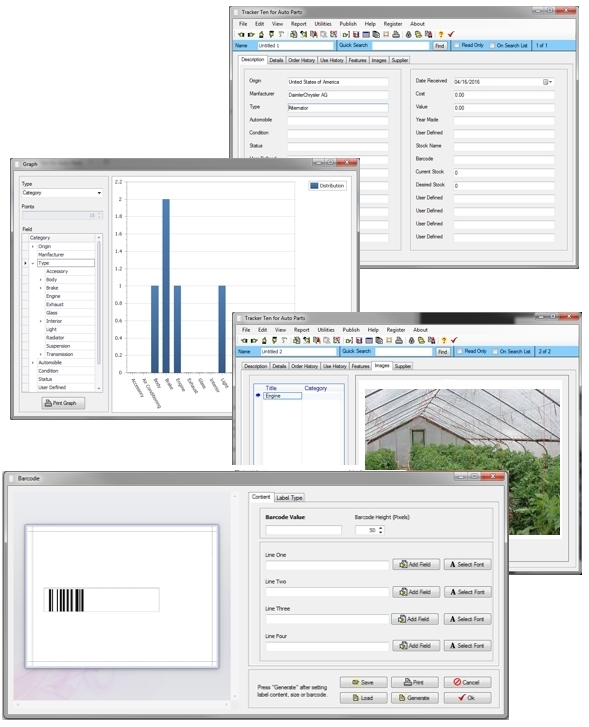

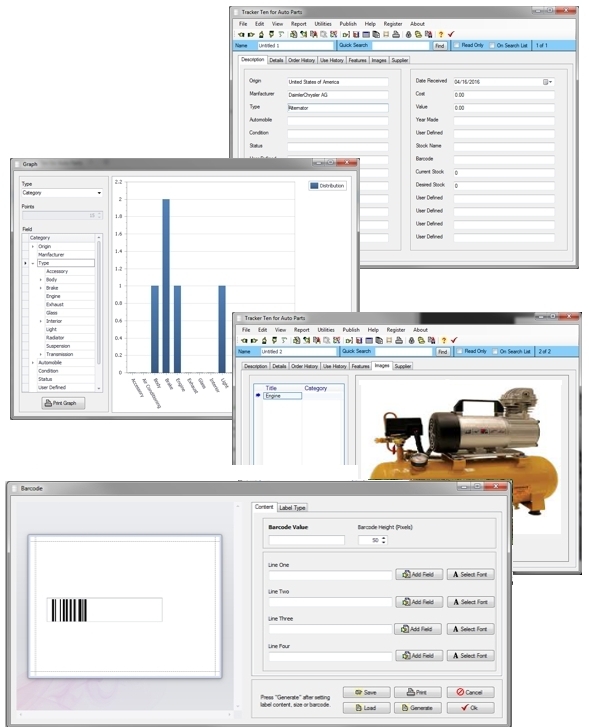

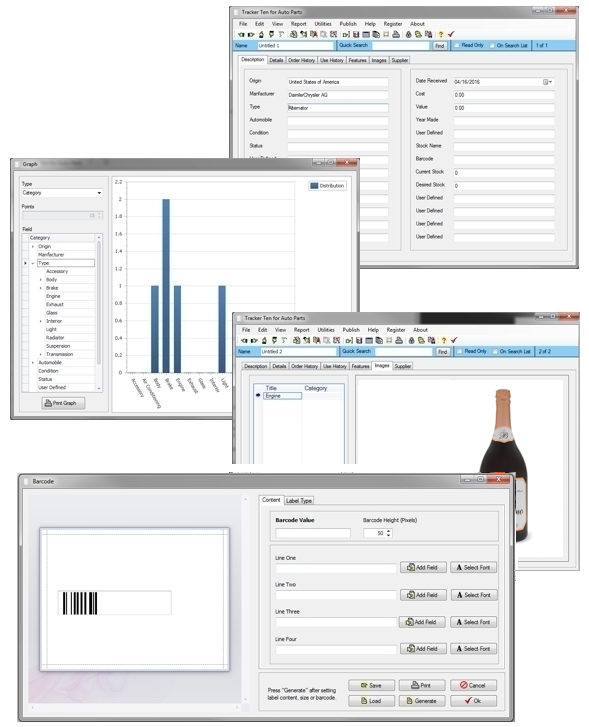
