
Lakehouse Data Architecture

For many people who are new to data and analytics, the number of different systems used to store and analyze information can feel overwhelming. Terms like data lakes, data warehouses, and analytics platforms are often mentioned as if everyone already understands them. To make sense of data lakehouses, it helps to begin with the basic problem they are trying to solve. Organizations collect enormous amounts of data from many sources, and they want that data to be easy to store, affordable to keep, and fast to analyze. Historically, no single system did all of these things well at the same time, which led to separate tools for storage and analysis. Data lakehouses were created to bring these tools together into one simpler, more efficient approach.

A data lake is a system designed to store large amounts of raw data at a low cost. It can hold almost any type of data, including text, images, logs, and structured records. The key advantage of a data lake is flexibility. Data can be stored in its original form without deciding upfront how it will be used. This makes it easy to collect data quickly and cheaply. However, traditional data lakes have limitations. Because the data is often unstructured and loosely managed, it can be difficult to ensure quality, consistency, and reliability when performing analysis.

A data warehouse, on the other hand, is built specifically for analysis. It organizes data into well-defined structures, applies rules to keep it clean, and makes it easy to run reports and queries. Data warehouses are excellent for business intelligence and decision-making because they provide reliable and consistent results. The downside is that they are often more expensive and less flexible. Data usually has to be carefully prepared and transformed before it can be loaded into a warehouse, which can slow things down.

For many years, organizations used both systems together. Raw data would first be stored in a data lake, then selected portions would be cleaned, transformed, and moved into a data warehouse for analysis. While this approach worked, it introduced complexity. Data teams had to manage multiple systems, build pipelines to move data between them, and deal with delays as data traveled from one place to another. This is where the idea of the data lakehouse comes in.

A data lakehouse combines the strengths of data lakes and data warehouses into a single system. It uses the low-cost storage typically associated with data lakes, but adds the structure, reliability, and management features traditionally found in data warehouses. Instead of treating storage and analytics as separate stages, the lakehouse allows both to happen in the same place. For beginners, this can be understood as one system that is both affordable for storing data and powerful enough to analyze it.

The key to making data lakehouses possible is a newer, more open system design. In the past, data warehouses often relied on specialized, proprietary storage systems. Data lakehouses instead build warehouse-like features directly on top of inexpensive storage, typically open file formats kept in cloud object storage. These features include table schemas, transactions, and version history. This means that the same data files used for long-term storage can also support high-performance queries and analytics. By applying consistent data structures and management rules to data stored in a lake, the lakehouse creates order without sacrificing flexibility.
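The idea of adding warehouse-like features on top of ordinary files can be made more concrete with a small example. The Python sketch below is loosely inspired by open table formats such as Delta Lake and Apache Iceberg, but it does not reproduce their file layouts or APIs. It assumes a local directory stands in for low-cost storage. The class name `ToyTable`, the `_log` directory, and its JSON commit files are all hypothetical.

Data lives in plain, immutable files. A numbered commit log decides which files belong to the table. As a result, readers only see fully committed data and can also read an older version.

```python
from __future__ import annotations

import json
import os
import tempfile
import uuid


class ToyTable:
    """Hypothetical toy table: immutable data files plus a commit log.

    Not the layout or API of any real table format.
    """

    def __init__(self, root: str) -> None:
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _versions(self) -> list[int]:
        # Each commit is a file named <version>.json inside _log.
        return sorted(
            int(name[: -len(".json")])
            for name in os.listdir(self.log_dir)
            if name.endswith(".json")
        )

    def append(self, rows: list[dict]) -> int:
        # 1. Write a new data file. It stays invisible until a commit lists it.
        data_file = f"part-{uuid.uuid4().hex}.jsonl"
        with open(os.path.join(self.root, data_file), "w") as f:
            for row in rows:
                f.write(json.dumps(row) + "\n")

        # 2. Write the commit to a temporary file, then publish it with
        #    os.link, which raises FileExistsError if another writer already
        #    claimed this version number. Readers never see a half-written commit.
        versions = self._versions()
        version = versions[-1] + 1 if versions else 0
        tmp = os.path.join(self.root, f"_tmp-{uuid.uuid4().hex}")
        with open(tmp, "w") as f:
            json.dump({"add": [data_file]}, f)
        try:
            os.link(tmp, os.path.join(self.log_dir, f"{version}.json"))
        finally:
            os.remove(tmp)
        return version

    def read(self, version: int | None = None) -> list[dict]:
        # Replay commits in order up to `version` (default: the latest).
        rows: list[dict] = []
        for v in self._versions():
            if version is not None and v > version:
                break
            with open(os.path.join(self.log_dir, f"{v}.json")) as f:
                commit = json.load(f)
            for data_file in commit["add"]:
                with open(os.path.join(self.root, data_file)) as f:
                    rows.extend(json.loads(line) for line in f)
        return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        table = ToyTable(root)
        table.append([{"id": 1, "amount": 10.0}])
        table.append([{"id": 2, "amount": 25.5}])
        print(len(table.read()))           # 2: latest version
        print(len(table.read(version=0)))  # 1: older snapshot
```

A real lakehouse would differ in several ways. It would store data in a columnar format such as Parquet on object storage, and it would retry a commit that loses the race for a version number. The sketch only shows why a commit log over ordinary files can give warehouse-style consistency without a proprietary storage system.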

One of the biggest benefits of this approach is speed. When data lives in multiple systems, teams spend a lot of time moving it around, validating it, and keeping copies in sync. With a data lakehouse, data is available in one place, reducing the need for duplication. Analysts, data scientists, and engineers can access the same data without waiting for it to be copied or transformed elsewhere. This allows teams to move more quickly from raw data to insights.

Another important advantage is having a more complete view of data. In traditional setups, not all data makes it into the data warehouse. Some data remains in the lake because it is unstructured, experimental, or not yet fully understood. This can limit what analysts and data scientists can see. A data lakehouse makes it easier to work with all available data, whether it is fully structured or still in a raw form. This can lead to more accurate analysis and better-informed decisions.

For machine learning and data science, access to up-to-date data is especially important. Models trained on outdated or incomplete data may perform poorly in the real world. Because data lakehouses reduce delays between data collection and analysis, teams can train and update models using the freshest information available. This can improve the quality of predictions and insights generated by machine learning systems.

Data lakehouses also help simplify collaboration across different roles. In many organizations, business analysts, data scientists, and engineers use different tools and systems. This can create barriers to sharing data and results. A lakehouse provides a shared foundation where different users can work with the same data in ways that suit their needs. Beginners benefit from this because there is less confusion about where data lives and how to access it.

Cost efficiency is another reason data lakehouses are gaining popularity. Storing large amounts of data in traditional warehouses can be expensive. By using low-cost storage for both raw and processed data, lakehouses help control costs while still offering advanced analytical capabilities. This makes it easier for organizations to keep more data for longer periods, which can be valuable for historical analysis and long-term trends.

From a learning perspective, data lakehouses reduce the number of concepts a beginner must master at once. Instead of understanding multiple systems and how they interact, newcomers can focus on a single environment that supports many use cases. This lowers the barrier to entry and makes it easier to experiment, learn, and build confidence with data tools.

Reliability and data quality are also central to the lakehouse design. Traditional data lakes can become disorganized over time, a state sometimes referred to as a "data swamp." Data lakehouses address this by adding rules and controls similar to those found in data warehouses. Examples include checking new data against a declared schema before it is written and applying updates as all-or-nothing transactions. These controls help ensure that data remains consistent, accurate, and trustworthy, even as it grows in volume and variety.
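One common control of this kind is schema enforcement: a write is rejected if it does not match the table's declared columns and types. The Python sketch below is a minimal, hypothetical illustration that uses an in-memory list as the "table." The schema, `SchemaError`, `validate_batch`, and `append_validated` are invented for this example and are not any product's API. Real lakehouse engines typically apply similar checks, but with their own type systems and rules for evolving a schema over time.

```python
from __future__ import annotations

# Hypothetical schema for an "orders" table: column name -> required type.
ORDERS_SCHEMA: dict[str, type] = {"order_id": int, "customer": str, "amount": float}


class SchemaError(ValueError):
    """Raised when a batch of rows does not match the declared schema."""


def validate_batch(rows: list[dict], schema: dict[str, type]) -> None:
    """Raise SchemaError on the first row that breaks the schema."""
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        extra = row.keys() - schema.keys()
        if missing or extra:
            raise SchemaError(
                f"row {i}: missing={sorted(missing)} extra={sorted(extra)}"
            )
        for column, expected in schema.items():
            value = row[column]
            # bool is a subclass of int in Python, so True would otherwise
            # pass as an int; reject it unless the column is declared bool.
            wrong_bool = isinstance(value, bool) and expected is not bool
            if wrong_bool or not isinstance(value, expected):
                raise SchemaError(
                    f"row {i}: {column}={value!r} is not {expected.__name__}"
                )


def append_validated(table: list[dict], rows: list[dict], schema: dict[str, type]) -> None:
    # Validate the whole batch before writing anything, so one bad row
    # rejects the batch instead of leaving it half-loaded.
    validate_batch(rows, schema)
    table.extend(rows)


if __name__ == "__main__":
    orders: list[dict] = []
    append_validated(orders, [{"order_id": 1, "customer": "Ana", "amount": 19.99}], ORDERS_SCHEMA)
    try:
        append_validated(
            orders,
            [
                {"order_id": 2, "customer": "Ben", "amount": 5.0},
                {"order_id": "3", "customer": "Cy", "amount": 1.0},  # wrong type
            ],
            ORDERS_SCHEMA,
        )
    except SchemaError as err:
        print("rejected:", err)
    print(len(orders))  # 1: the bad batch was not written
```

The sketch checks the entire batch before writing any of it. Pairing this all-or-nothing behaviour with schema checks is what keeps a single malformed record from quietly turning part of the lake into a "data swamp."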

Another important feature is support for different types of workloads. A data lakehouse can handle reporting, ad-hoc analysis, and machine learning from the same data source. This flexibility means teams do not need to choose between performance and versatility. Beginners can explore different types of analysis without switching systems or learning entirely new tools.

The open nature of many data lakehouse designs also encourages innovation. Open systems are built on widely supported technologies and formats, making it easier to integrate with other tools and platforms. This reduces vendor lock-in and gives organizations more freedom to adapt as their needs change. For learners, this openness often means better documentation, larger communities, and more learning resources.

In practical terms, a data lakehouse helps organizations spend less time managing data infrastructure and more time using data to answer questions. By merging storage and analytics into a single system, it removes many of the bottlenecks that slow down data projects. Teams can focus on exploring data, building models, and generating insights rather than maintaining complex pipelines.

In summary, data lakehouses represent a modern approach to data management that combines the affordability and flexibility of data lakes with the structure and performance of data warehouses. By implementing warehouse-like features directly on low-cost storage, they create a unified system that is easier to manage and faster to use. For novices, the value lies in simplicity and accessibility. With fewer systems to learn and more complete, up-to-date data at their fingertips, data lakehouses make it easier to understand, analyze, and apply data across business analytics, machine learning, and data science projects.

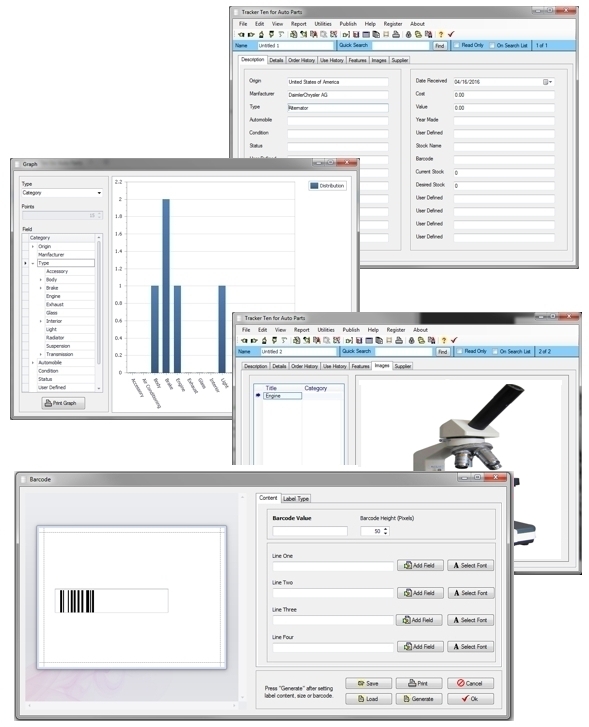

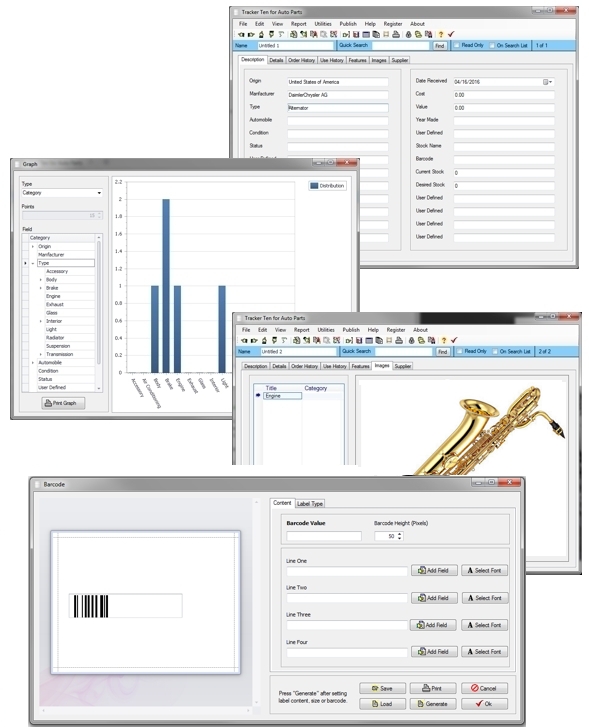

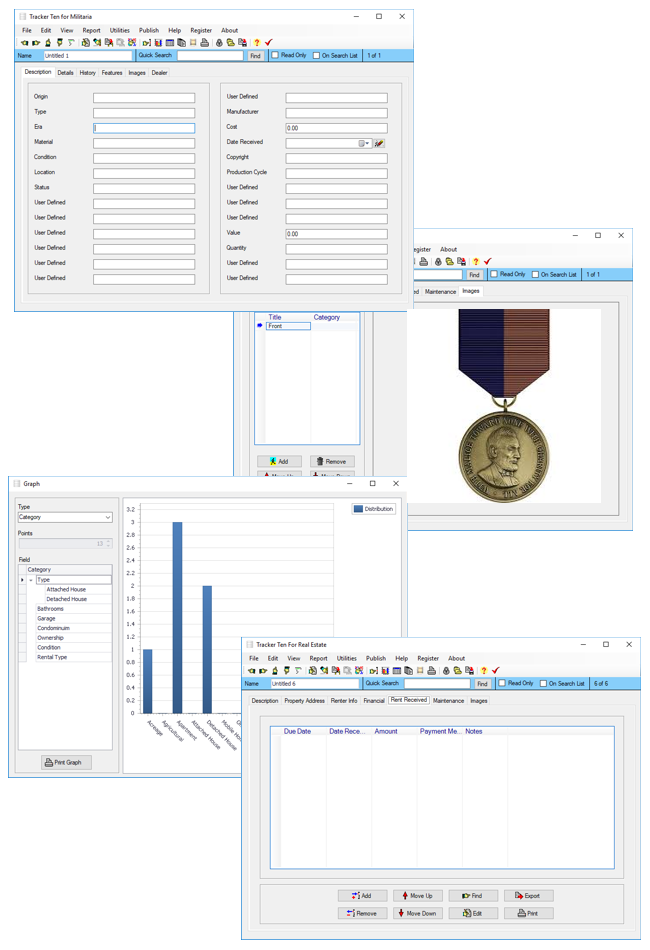

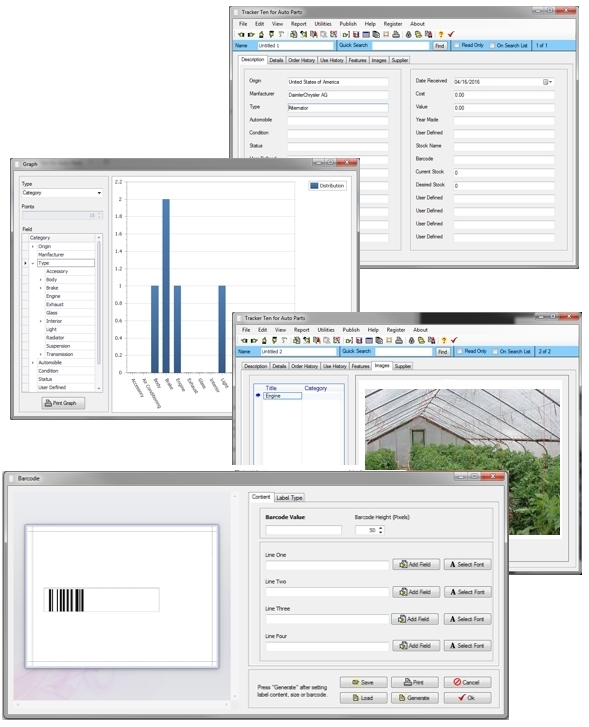

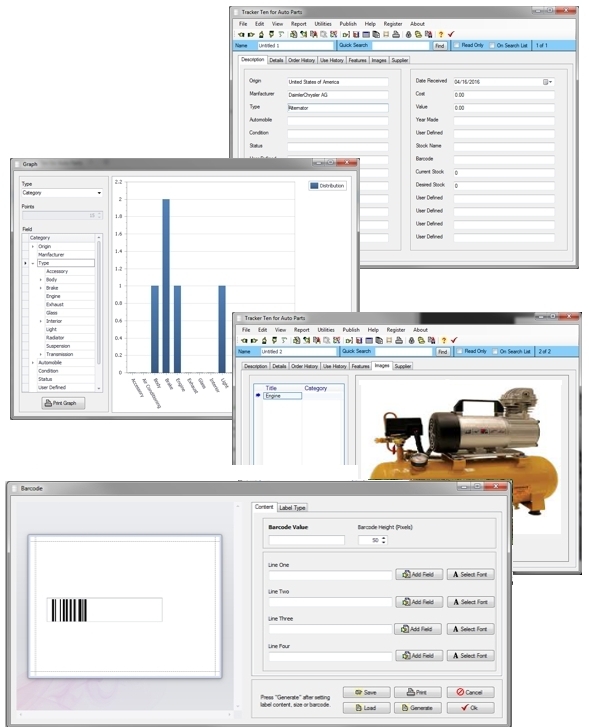

