
Artificial Intelligence Assisted Data Preparation

For decades, one of the most demanding and least glamorous parts of working with data has been the preparation stage. Before any meaningful analysis could begin, data professionals were required to spend countless hours collecting raw information, correcting errors, standardizing formats, moving data between systems, and ensuring that everything met governance and security requirements. This work formed the backbone of the analytics pipeline, yet it was largely manual, repetitive, and prone to human error. By 2026, this long-standing reality is expected to change dramatically as intelligent systems assume responsibility for much of the data preparation process.

The analytics pipeline has traditionally been a multi-step journey that begins long before insights are generated. Raw data often arrives from a wide variety of sources, including transactional databases, sensors, user activity logs, third-party providers, and legacy systems. Each source typically uses its own formats, naming conventions, and structures. Data engineers and analysts have historically been tasked with reconciling these differences through extract, transform, and load (ETL) processes. These workflows require careful design, constant maintenance, and frequent adjustments as data sources evolve.

In many organizations, the complexity of ETL pipelines has grown alongside the volume and diversity of data. As new systems are added and business needs change, pipelines must be rewritten or extended, often under tight deadlines. This has led to fragile infrastructures where small changes in upstream data can break downstream processes. Engineers spend significant portions of their time troubleshooting failures, updating schemas, and manually validating results instead of focusing on higher-level architectural or analytical work.
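One common way to catch this kind of breakage early is to compare incoming records against an expected schema before they reach downstream steps. The sketch below is only an illustration: `EXPECTED_SCHEMA`, its column names, and `find_schema_drift` are hypothetical and do not come from any particular tool.

```python
from __future__ import annotations

from typing import Any

# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def find_schema_drift(record: dict[str, Any], expected: dict[str, type]) -> list[str]:
    """Describe every way a record deviates from the expected schema."""
    problems = []
    # Columns the schema expects: report them if absent or of the wrong type.
    for column, expected_type in expected.items():
        if column not in record:
            problems.append(f"missing column: {column}")
        elif not isinstance(record[column], expected_type):
            actual = type(record[column]).__name__
            problems.append(f"{column}: expected {expected_type.__name__}, got {actual}")
    # Columns the upstream source added without warning.
    for column in record:
        if column not in expected:
            problems.append(f"unexpected column: {column}")
    return problems
```

An empty list means the record matches; anything else can be logged or used to stop the run before a downstream step fails in a less obvious way.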

By 2026, advances in intelligent automation are expected to fundamentally reshape this landscape. Rather than relying on hand-coded scripts and rigid workflows, organizations will increasingly deploy smart algorithms that can understand data structures, identify anomalies, and apply transformations autonomously. These systems will be capable of detecting missing values, correcting inconsistencies, normalizing formats, and enriching datasets without direct human intervention. What was once a painstaking manual task will become a largely automated process that adapts as data changes.

One of the most transformative aspects of this shift is the automation of data cleaning. Data quality issues have always been a major obstacle to reliable analytics. Duplicate records, inconsistent naming, incorrect values, and incomplete fields can undermine confidence in results and slow decision-making. Intelligent algorithms can now recognize common patterns of error and apply corrective actions at scale. By learning from historical corrections and domain rules, these systems can continuously improve their accuracy, reducing the need for manual oversight.
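As a rough illustration of what rule-based cleaning looks like before any learning is layered on top, the sketch below drops duplicate records and sets aside incomplete ones. The field names (`name`, `email`) and the matching rule are assumptions made for the example, not a description of how any specific product works.

```python
def normalize_name(name: str) -> str:
    """Collapse whitespace and case so trivially different spellings compare equal."""
    return " ".join(name.split()).casefold()


def clean_records(records: list) -> tuple:
    """Split records into (clean, flagged).

    A record is flagged when a required field is missing or empty. Among the
    remaining records, later duplicates (same normalized name and email) are dropped.
    """
    required = ("name", "email")  # hypothetical required fields
    seen = set()
    clean, flagged = [], []
    for record in records:
        if any(not str(record.get(field) or "").strip() for field in required):
            flagged.append(record)
            continue
        key = (normalize_name(record["name"]), record["email"].strip().casefold())
        if key in seen:
            continue  # duplicate of a record already kept
        seen.add(key)
        clean.append(record)
    return clean, flagged
```

Flagged records are returned rather than silently discarded, so a person or a later rule can decide how to repair them.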

Formatting and standardization are also prime candidates for automation. In traditional pipelines, engineers must explicitly define how dates, currencies, units, and categorical values should be represented. In a future driven by smart systems, these transformations can be inferred automatically based on context and usage. For example, algorithms can recognize that multiple fields represent dates in different formats and unify them into a standard representation. This not only saves time but also ensures consistency across datasets and analytical outputs.
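The date example can be sketched with the standard library alone. This is a deliberately simple version that tries a fixed list of formats; the list and its ordering are assumptions, and a real system would need some other signal to resolve ambiguous values.

```python
from __future__ import annotations

from datetime import datetime

# Candidate formats, tried in order (an assumed list, not an exhaustive one).
# An ambiguous value such as "03/04/2025" is read as month/day because the
# US-style format is listed before the day-first ones.
# Note: "%b" matches month abbreviations in the current locale (English by default).
CANDIDATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%d %b %Y")


def to_iso_date(value: str) -> str | None:
    """Return the value as an ISO 8601 date string, or None if no format matches."""
    for fmt in CANDIDATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

Returning `None` for unrecognized values keeps the function from guessing, which leaves room for review of anything it could not parse.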

ETL workflows themselves are undergoing a fundamental reimagining. Instead of static pipelines built through configuration files or code, intelligent platforms can dynamically orchestrate data movement based on high-level objectives. These systems can determine when data should be extracted, how it should be transformed, and where it should be loaded, all while optimizing for performance, cost, and reliability. They can also monitor pipelines in real time, detect failures, and apply corrective actions without human intervention.
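The simplest form of "detect failures and apply corrective actions" is an automatic retry with backoff around each pipeline step. The sketch below assumes a step is just a Python callable; `run_with_retries` and its parameters are illustrative names, not part of any orchestration platform.

```python
import time
from typing import Callable


def run_with_retries(step: Callable[[], object], max_attempts: int = 3,
                     base_delay: float = 1.0) -> tuple:
    """Run a pipeline step, retrying on failure with exponential backoff.

    Returns (result, log), where log lists the failed attempts.
    Re-raises the last exception if every attempt fails.
    """
    log = []
    for attempt in range(1, max_attempts + 1):
        try:
            return step(), log
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            log.append(f"attempt {attempt} failed: {exc}")
            if attempt == max_attempts:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            time.sleep(base_delay * 2 ** (attempt - 1))
```

The returned log gives a monitoring layer something concrete to record even when the step eventually succeeds.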

Security and compliance, traditionally layered onto pipelines as separate concerns, are also becoming integral parts of automated data workflows. By 2026, smart systems will be capable of performing security audits autonomously, verifying that data handling processes comply with organizational policies and regulatory requirements. This includes checking access controls, ensuring sensitive fields are properly protected, and validating that data flows adhere to defined boundaries. Automated auditing reduces the risk of oversight and helps organizations maintain compliance in increasingly complex environments.
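A small part of such an audit can be expressed as plain checks over data and access grants. In the sketch below, the policy itself is invented for illustration: the sensitive column names, the allowed roles, and the rule that a masked value shows at most four digits are all assumptions.

```python
# Hypothetical policy: which columns are sensitive and which roles may read them.
SENSITIVE_COLUMNS = {"ssn", "card_number"}
ALLOWED_ROLES = {"compliance", "finance"}


def is_masked(value: str) -> bool:
    """Assumed masking rule: at most the last four digits remain visible."""
    return sum(ch.isdigit() for ch in value) <= 4


def audit_dataset(rows: list, grants: list) -> list:
    """Return a list of policy findings; an empty list means the audit passed."""
    findings = []
    # Check that every sensitive field present in the data is masked.
    for index, row in enumerate(rows):
        for column in sorted(SENSITIVE_COLUMNS.intersection(row)):
            if not is_masked(str(row[column])):
                findings.append(f"row {index}: {column} is not masked")
    # Check that only approved roles have been granted access.
    for role in sorted(set(grants) - ALLOWED_ROLES):
        findings.append(f"role {role!r} is not allowed to read sensitive columns")
    return findings
```

Running checks like these on every pipeline execution, rather than during a periodic review, is what makes the audit part of the workflow instead of a separate concern.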

Perhaps the most striking change on the horizon is the way data engineers interact with these systems. Instead of writing extensive code or configuring complex tools, engineers will increasingly define pipelines using natural language. By describing desired outcomes in plain terms, such as "combine customer transaction data with marketing interactions and refresh it daily," professionals can delegate the implementation details to intelligent platforms. These systems translate human intent into executable workflows, dramatically lowering the barrier to building and modifying pipelines.
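To make "translating intent into an executable workflow" more concrete, the sketch below shows one structured form the quoted request might be turned into, and a tiny in-memory executor for it. The translation step itself is not shown. `PipelineSpec`, its fields, the source names, and `customer_id` as the join key are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineSpec:
    """Hypothetical structured form of a plain-language pipeline request."""
    left_source: str
    right_source: str
    join_key: str
    schedule: str


# One possible reading of the request; every name here is illustrative.
spec = PipelineSpec(
    left_source="customer_transactions",
    right_source="marketing_interactions",
    join_key="customer_id",
    schedule="daily",
)


def run_pipeline(spec: PipelineSpec, sources: dict) -> list:
    """Inner-join the two named sources on spec.join_key."""
    # Index the right-hand rows by key so each left row is matched in one lookup.
    right_by_key = {}
    for row in sources[spec.right_source]:
        right_by_key.setdefault(row[spec.join_key], []).append(row)
    joined = []
    for left in sources[spec.left_source]:
        for right in right_by_key.get(left[spec.join_key], []):
            joined.append({**left, **right})
    return joined
```

Keeping the intermediate spec explicit gives engineers something to inspect and correct before anything runs, for instance if the system picked the wrong join key.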

This shift does not eliminate the role of data engineers; rather, it elevates it. Engineers move away from repetitive implementation tasks and focus more on defining standards, setting policies, and ensuring that automated systems align with business objectives. Their expertise becomes critical in guiding how intelligent tools interpret requirements and in validating that outputs meet expectations. The emphasis shifts from "how" pipelines are built to "what" they should achieve.

The most direct impact of this transformation is faster insight generation. When data preparation becomes automated and adaptive, the time between asking a question and receiving an answer shrinks dramatically. Analysts and decision-makers no longer wait days or weeks for data to be cleaned and prepared. Instead, they can access high-quality datasets almost immediately, enabling more responsive and informed decisions. This agility is especially valuable in fast-moving industries where timing can be a competitive advantage.

Reduced friction between ideas and execution is another major benefit. In traditional environments, even simple analytical requests can be delayed by the need to modify pipelines or resolve data issues. With intelligent automation and natural language interfaces, new ideas can be tested quickly and iterated upon easily. This encourages experimentation and innovation, as teams are no longer constrained by the overhead of manual data engineering work.

However, the transition to automated analytics pipelines also introduces new challenges. Trust in automated systems becomes a critical concern. Organizations must be confident that smart algorithms are applying correct transformations and that errors are detected before they propagate. This requires transparency into how decisions are made and robust monitoring mechanisms. Human oversight remains essential, particularly for high-stakes data used in strategic decision-making.
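One way to keep errors from propagating is a quality gate that compares a dataset before and after an automated transformation and holds it for review when something looks off. The sketch below is a minimal version; the two metrics and their default thresholds are assumptions chosen for the example.

```python
def quality_gate(before: list, after: list,
                 max_row_loss: float = 0.05, max_null_rate: float = 0.10) -> list:
    """Return reasons to hold a transformed dataset for human review.

    An empty list means the dataset passed both checks.
    """
    reasons = []
    # Check 1: the transformation should not silently drop too many rows.
    if before:
        row_loss = (len(before) - len(after)) / len(before)
        if row_loss > max_row_loss:
            reasons.append(f"row loss {row_loss:.0%} exceeds {max_row_loss:.0%}")
    # Check 2: no column should end up mostly empty.
    columns = sorted({column for row in after for column in row})
    for column in columns:
        nulls = sum(1 for row in after if row.get(column) is None)
        null_rate = nulls / len(after)
        if null_rate > max_null_rate:
            reasons.append(f"{column}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
    return reasons
```

Because the function returns its reasons as readable text, the same output can feed both an automated block and the human reviewer who decides what happens next.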

There are also cultural implications to consider. As automation takes over tasks that were once core responsibilities, roles and skill sets must evolve. Data professionals will need to develop a deeper understanding of system behavior, governance, and cross-functional communication. Training and change management become key components of a successful transition, ensuring that teams can effectively collaborate with intelligent systems rather than resist them.

By 2026, the analytics pipeline is expected to look very different from its traditional form. What was once a bottleneck defined by manual effort and technical complexity will increasingly become a streamlined, adaptive process driven by smart automation. Data cleaning, formatting, ETL orchestration, and security checks will operate largely in the background, guided by high-level intent rather than detailed instructions.

The result is an analytics ecosystem that moves at the speed of thought. Ideas can be translated into action quickly, insights are generated faster, and organizations can respond more effectively to change. While challenges remain, the convergence of intelligent algorithms and natural language interfaces marks a pivotal moment in the evolution of data work. By reducing friction and freeing human expertise for higher-value activities, this transformation has the potential to redefine how organizations turn data into understanding and action.

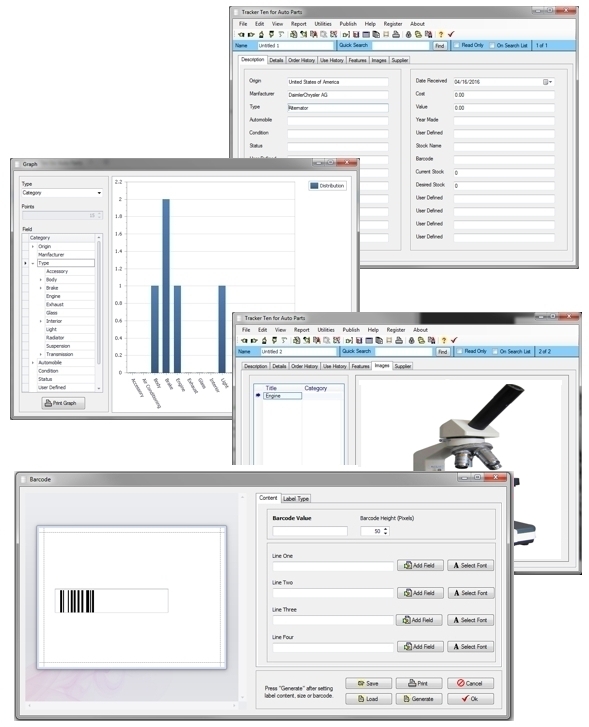

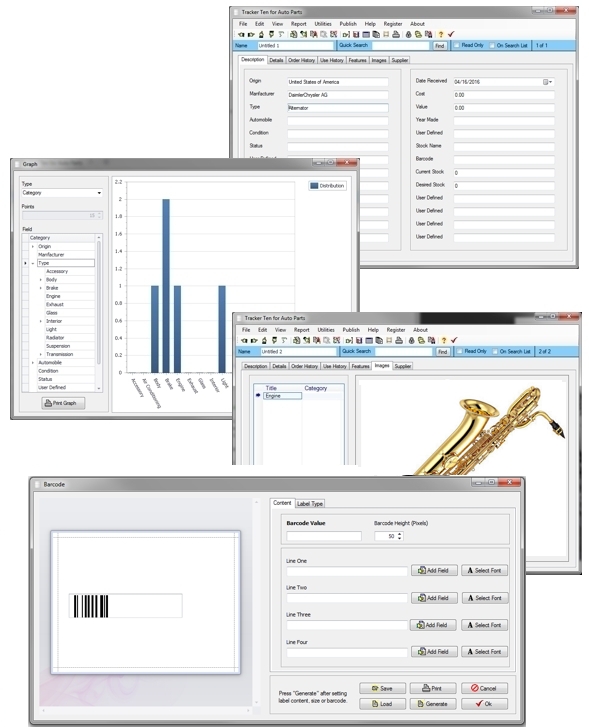

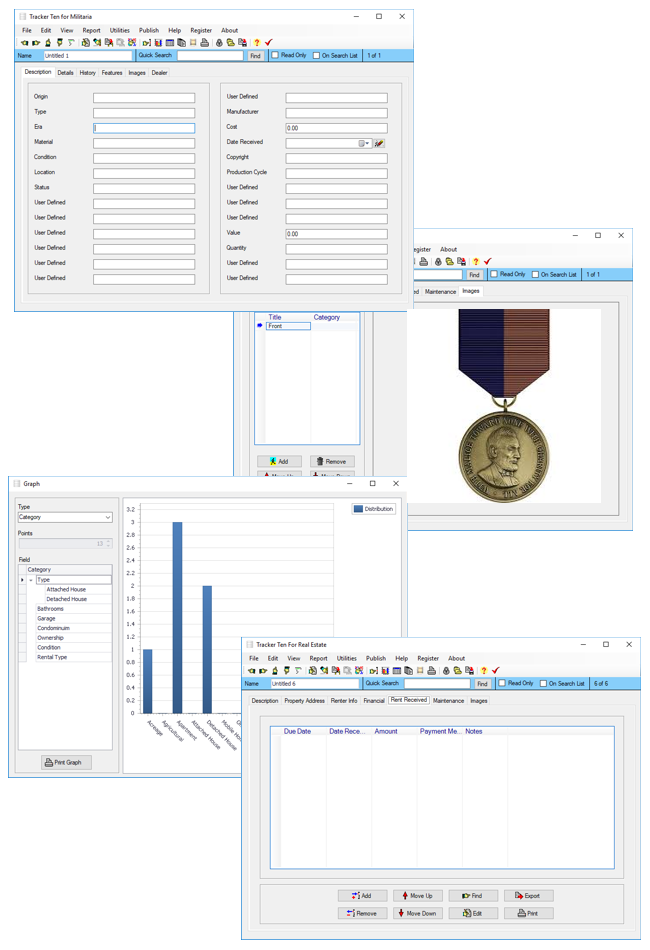

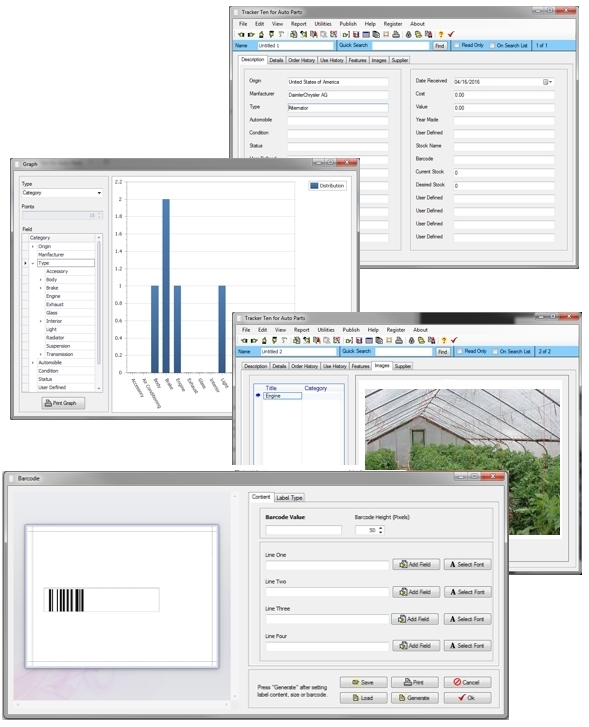

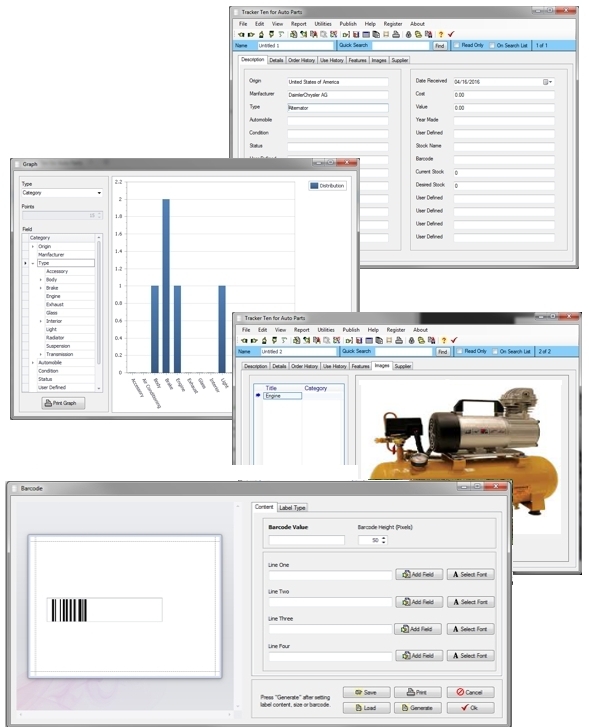

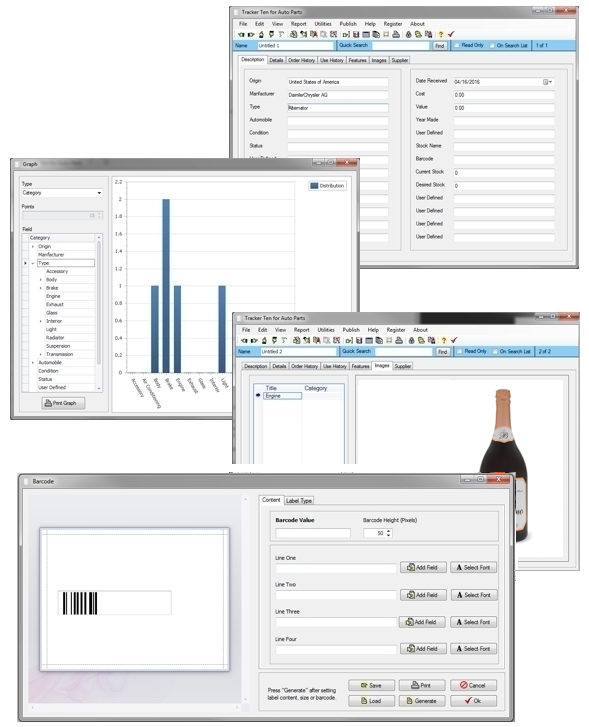
