Cleaning Data

Data cleaning is the process of identifying and correcting or removing errors, inconsistencies, and inaccuracies in datasets. It is a critical step in data analysis and data science, because the accuracy and reliability of any insight or decision drawn from data depend on the quality of the underlying dataset. Poor-quality data can lead to incorrect conclusions, flawed business strategies, and wasted resources.

Some common tasks involved in cleaning data include:

- Removing duplicate records: Identifying and removing records that appear more than once ensures that each observation is unique. Duplicate records can distort statistical analyses, lead to incorrect counts, and inflate totals.

- Handling missing data: Missing values can occur due to human error, system glitches, or incomplete data collection. Cleaning missing data involves deciding whether to remove the incomplete records or impute missing values using methods such as mean/mode substitution, interpolation, or predictive modeling.

- Correcting inaccurate data: Inaccurate data can occur due to typos, misreporting, or outdated information. Cleaning inaccurate data involves verifying and correcting errors either manually or using automated techniques like pattern recognition, regular expressions, or cross-referencing with authoritative sources.

- Standardizing data: Standardization ensures that the data follows consistent formats. This can include converting dates to a standard format, ensuring consistent capitalization, formatting phone numbers or addresses uniformly, or unifying units of measurement.

- Handling outliers: Outliers are data points that deviate significantly from other observations. While some outliers represent valid extreme cases, others may be errors that can skew analyses. Identifying outliers and deciding whether to keep, correct, or remove each one improves model accuracy and the reliability of insights.

- Checking for consistency: Ensuring that data is consistent with other datasets or historical records helps maintain reliability. For example, product codes, customer IDs, and transactional data should match across multiple tables or sources.

Beyond these tasks, several practical considerations shape how cleaning is carried out:

- Data cleaning is iterative: Cleaning data is not a one-time process. Analysts often need to iterate between cleaning and analyzing to identify hidden errors, inconsistencies, or anomalies.

- Large datasets: In large datasets, errors and inconsistencies are harder to spot, which makes cleaning more complex. Automated tools are invaluable for processing millions of rows efficiently while maintaining accuracy.

- Manual vs. automated cleaning: Automated methods can speed up cleaning and ensure consistent application of rules, but manual checks are often necessary to verify edge cases and ambiguous data.

- Making assumptions: Sometimes missing or ambiguous data requires educated guesses. Documenting assumptions ensures transparency and allows others to understand and reproduce the analysis.

- Ethical and privacy considerations: Cleaning sensitive data may require removing personally identifiable information (PII) or ensuring compliance with privacy regulations. Ethical handling of data prevents unintentional harm to individuals.
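
Three of the tasks above (removing duplicates, handling missing data, and standardizing formats) can be illustrated with a minimal sketch in plain Python. The sample records, field names, and accepted date formats are hypothetical and chosen only for illustration; mean substitution is used for the missing value because it is one of the methods named above, not because it is always the right choice.

```python
from datetime import datetime
from statistics import mean

# Hypothetical sample records; field names are illustrative only.
raw_records = [
    {"id": "1", "name": "  alice SMITH ", "signup": "2024-01-05", "age": "34"},
    {"id": "1", "name": "  alice SMITH ", "signup": "2024-01-05", "age": "34"},  # exact duplicate
    {"id": "2", "name": "Bob Jones", "signup": "05/02/2024", "age": ""},  # missing age
    {"id": "3", "name": "carol white", "signup": "2024-03-10", "age": "40"},
]

# Assumed input date formats, tried in order. Ambiguous dates such as
# 05/02/2024 are read as day/month/year here; that is an assumption.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def remove_duplicates(records):
    """Keep the first occurrence of each identical record, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def standardize_date(text):
    """Convert a date in any assumed format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def clean(records):
    """Deduplicate, standardize names and dates, and impute missing ages."""
    unique = remove_duplicates(records)
    known_ages = [int(r["age"]) for r in unique if r["age"].strip()]
    # Mean substitution; None if no age is known at all.
    fallback_age = round(mean(known_ages)) if known_ages else None
    cleaned = []
    for r in unique:
        has_age = bool(r["age"].strip())
        cleaned.append({
            "id": r["id"],
            "name": " ".join(r["name"].split()).title(),  # trim and fix capitalization
            "signup": standardize_date(r["signup"]),
            "age": int(r["age"]) if has_age else fallback_age,
            "age_imputed": not has_age,  # record the assumption for transparency
        })
    return cleaned


cleaned_records = clean(raw_records)
```

The `age_imputed` flag is one simple way to document an assumption in the data itself, so that later analyses can tell original values from estimated ones.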

In short, cleaning data is critical for ensuring the accuracy and reliability of datasets. It involves multiple steps and requires attention to detail, ethical considerations, and a combination of manual and automated approaches.
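
The outlier and consistency checks from the task list can be sketched in the same way. The example below assumes Tukey's interquartile-range rule for outliers, which is one common convention rather than the only option, and uses hypothetical order and customer tables. It only flags suspect values; deciding whether a flagged value is an error or a valid extreme case remains a judgment call.

```python
from statistics import quantiles


def flag_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def orphaned_keys(child_rows, parent_rows, key):
    """Return key values used in child_rows that are absent from parent_rows."""
    known = {row[key] for row in parent_rows}
    return sorted({row[key] for row in child_rows if row[key] not in known})


# Hypothetical data: one order total is far from the rest, and one order
# refers to a customer ID that does not exist in the customer table.
order_totals = [20, 22, 19, 21, 23, 20, 500]
customers = [{"customer_id": "C1"}, {"customer_id": "C2"}]
orders = [
    {"order_id": 1, "customer_id": "C1"},
    {"order_id": 2, "customer_id": "C9"},
]

suspect_totals = flag_outliers(order_totals)
missing_customers = orphaned_keys(orders, customers, "customer_id")
```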

Why Clean Data

Cleaning data is vital for several reasons that directly impact the quality of insights, decision-making, and organizational efficiency.

- Accurate Analysis: Cleaning data ensures that analyses are based on accurate and reliable data. Erroneous or inconsistent data can result in misleading insights and poor decision-making.

- Better Decision-making: Reliable data enables organizations to make informed decisions. Clean data ensures that reports, dashboards, and models reflect reality, supporting strategic planning.

- Improved Data Quality: High-quality data improves the overall usability of datasets. Clean data is more interpretable, easier to analyze, and provides better input for predictive modeling and machine learning applications.

- Cost-effectiveness: Cleaning data is often more cost-effective than collecting entirely new data. By improving the quality of existing data, organizations save time and resources while maximizing the value of existing assets.

- Regulatory Compliance: Many industries are subject to regulations requiring accurate and secure data management. Data cleaning can help organizations comply with legal obligations, such as protecting PII or ensuring financial records are accurate.

- Enhanced Data Integration: Clean data integrates more smoothly with other datasets, avoiding errors and inconsistencies that can arise during merging or analysis.

- Trust and Credibility: Clean data fosters confidence in the results of analyses. Stakeholders are more likely to trust reports, forecasts, and recommendations derived from reliable data.

Overall, data cleaning ensures the foundation of analysis is strong, enabling accurate insights, better decision-making, and operational efficiency.

Data Cleaning Tools

Several tools and software applications are available to help automate and streamline the data cleaning process. Choosing the right tool depends on dataset size, complexity, and the desired level of automation.

- OpenRefine: A free, open-source tool for cleaning and transforming data. OpenRefine allows you to explore, clean, and normalize messy datasets efficiently. It supports structured and semi-structured data, such as CSV files, JSON, and XML.

- Trifacta: A commercial tool, now part of Alteryx, that uses machine learning and natural language processing to automate data cleaning. Trifacta provides visualization and profiling features to quickly detect anomalies, inconsistencies, and missing values.

- DataWrangler: A web-based tool for cleaning and transforming datasets. It helps users perform common cleaning tasks like reformatting, merging, and reshaping data, with an intuitive interface for interactive cleaning.

- Talend: A commercial data integration platform that includes data cleaning, transformation, and validation capabilities. Talend supports large datasets and integrates with multiple data sources and cloud platforms.

- Microsoft Excel: While not a dedicated data cleaning tool, Excel offers functionalities such as filtering, sorting, conditional formatting, and data validation to clean and standardize datasets.

- Python Libraries: Libraries like pandas, NumPy, and openpyxl provide powerful scripting capabilities for automating cleaning tasks, handling missing data, detecting duplicates, and performing complex transformations.

- R Packages: Packages like dplyr, tidyr, and janitor in R enable systematic cleaning of datasets, including reshaping, standardization, and validation.

No single tool fits every workflow, and budget is often a deciding factor alongside dataset size and automation needs. Many organizations use a combination of tools to achieve the best results.

Data Cleaning Skills

Data cleaning requires a mix of technical, analytical, and soft skills. Successful data cleaning is not only about knowing the tools but also about understanding the data context and thinking critically about the process.

- Data Analysis: Understanding statistical concepts and methods helps identify anomalies, outliers, and inconsistencies in the data.

- Programming Skills: Proficiency in Python, R, SQL, or other programming languages allows automation of repetitive cleaning tasks and advanced data transformations.

- Data Profiling: Examining datasets to discover patterns, distributions, and potential errors is critical for effective cleaning.

- Attention to Detail: Detecting subtle inconsistencies, formatting issues, or anomalies requires careful inspection and thoroughness.

- Critical Thinking: Analysts must question assumptions, consider the data source, and understand potential biases that could affect the data quality.

- Communication: Collaboration with stakeholders and subject matter experts ensures that the cleaning process aligns with analytical goals and business requirements.

- Documentation: Clear documentation of cleaning steps, assumptions, and limitations ensures reproducibility and transparency.

Developing these skills requires practice, experience, and continuous learning. Analysts must stay updated with new tools, techniques, and best practices to effectively maintain data quality.
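
As an illustration of the data profiling skill listed above, the following minimal sketch summarizes each column of a small dataset. The `profile` function and the sample rows are hypothetical; a real profile would usually also cover value ranges and distributions.

```python
from collections import Counter


def profile(rows):
    """Summarize each column: missing count, distinct count, most common value."""
    columns = sorted({name for row in rows for name in row})
    report = {}
    for name in columns:
        values = [row.get(name) for row in rows]
        # Treat absent keys, None, and empty strings as missing.
        present = [v for v in values if v not in (None, "")]
        counts = Counter(present)
        report[name] = {
            "missing": len(values) - len(present),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return report


# Hypothetical rows with inconsistent capitalization and missing values.
rows = [
    {"country": "US", "state": "CA"},
    {"country": "us", "state": ""},
    {"country": "US", "state": "NY"},
    {"country": "Canada"},
]
summary = profile(rows)
```

Here the `country` column reports three distinct values even though only two countries appear, which points to a capitalization problem worth standardizing.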

Data Cleaning Process

The data cleaning process generally involves several structured steps, ensuring that datasets are accurate, consistent, and analysis-ready.

- Define Goals: Establish the objectives of cleaning. Understand which analyses will be performed and which data elements are critical for accuracy.

- Data Profiling: Examine datasets for missing values, duplicates, anomalies, inconsistent formatting, and outliers. Profiling reveals the scope and nature of cleaning required.

- Develop Cleaning Plan: Create a systematic plan to address identified issues. Decide which tasks will be automated, which require manual verification, and the priority of each step.

- Data Cleaning: Implement the cleaning plan. Remove duplicates, correct errors, standardize formats, handle missing data, and address outliers.

- Data Verification: Validate the cleaning process by running quality checks, ensuring that the cleaned dataset meets the defined objectives.

- Document Process: Record each step, assumptions, and decisions made. Documentation is essential for reproducibility, transparency, and future reference.

- Iterative Review: Cleaning is often iterative. Re-examine data as analyses progress to uncover hidden inconsistencies or emerging issues.

By following a structured approach, organizations can maintain clean, reliable, and trustworthy datasets suitable for accurate analysis.
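
The cleaning, verification, and documentation steps above can be tied together in a small pipeline. This is a sketch under stated assumptions: the step functions, the `email` field, and the two quality checks are hypothetical examples, and the audit log is just one lightweight way to record what each step did.

```python
def run_pipeline(rows, steps):
    """Apply each (name, function) step in order and log row counts."""
    log = []
    for name, step in steps:
        before = len(rows)
        rows = step(rows)
        log.append({"step": name, "rows_before": before, "rows_after": len(rows)})
    return rows, log


def drop_blank_emails(rows):
    """Remove records whose email is empty or whitespace."""
    return [r for r in rows if r["email"].strip()]


def lowercase_emails(rows):
    """Standardize emails by trimming whitespace and lowercasing."""
    return [{**r, "email": r["email"].strip().lower()} for r in rows]


def drop_duplicate_emails(rows):
    """Keep the first record for each email address."""
    seen, unique = set(), []
    for r in rows:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    return unique


def verify(rows):
    """Return a list of failed quality checks; an empty list means all passed."""
    failures = []
    emails = [r["email"] for r in rows]
    if len(emails) != len(set(emails)):
        failures.append("duplicate emails remain")
    if any("@" not in e for e in emails):
        failures.append("malformed email present")
    return failures


raw = [
    {"email": "Ann@Example.com "},
    {"email": "ann@example.com"},
    {"email": ""},
    {"email": "bob@example.com"},
]
steps = [
    ("drop blank emails", drop_blank_emails),
    ("lowercase emails", lowercase_emails),
    ("drop duplicate emails", drop_duplicate_emails),
]
clean_rows, audit_log = run_pipeline(raw, steps)
problems = verify(clean_rows)
```

Step order matters in this sketch: standardizing emails before deduplicating is what allows the two spellings of the same address to be recognized as one record.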

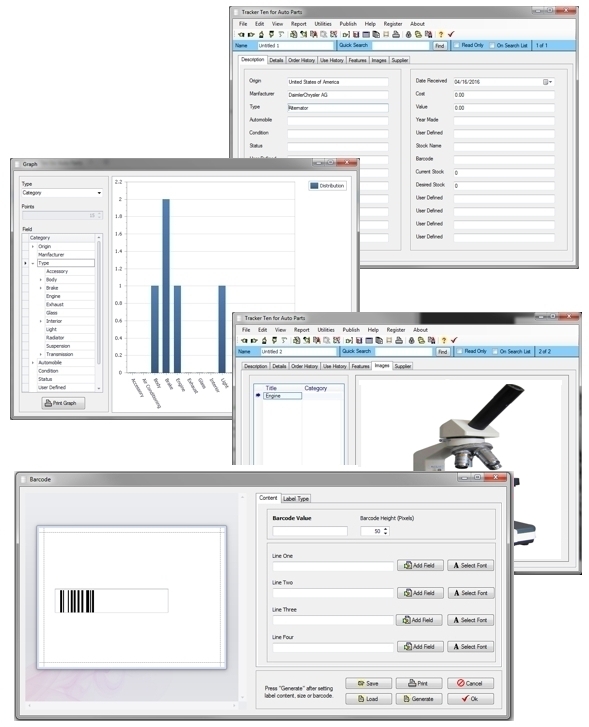

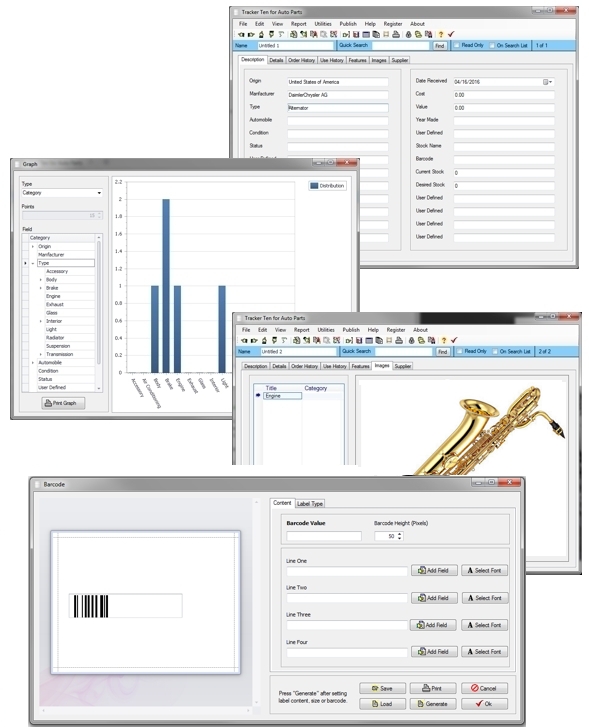

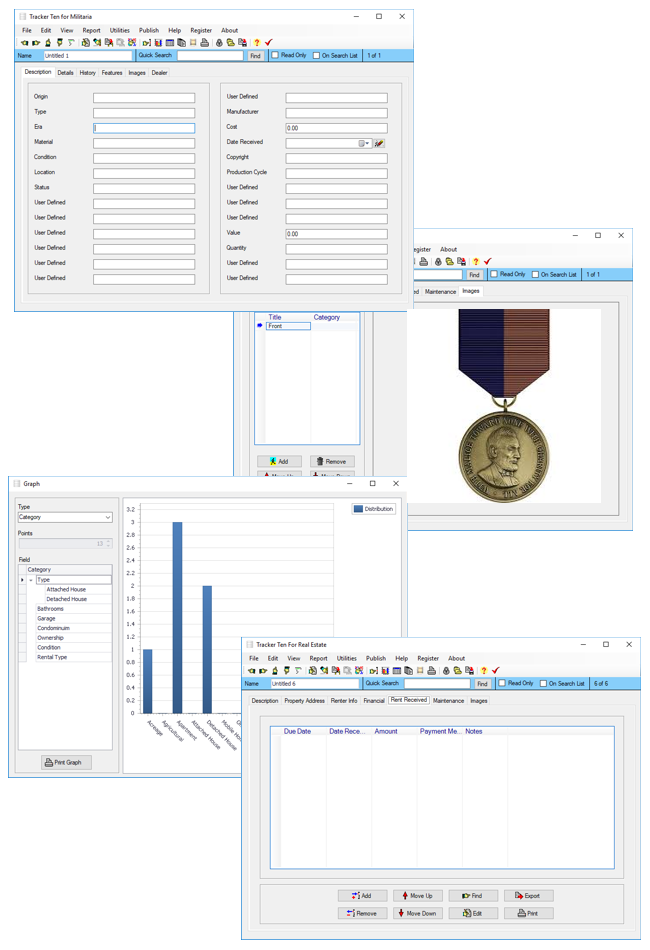

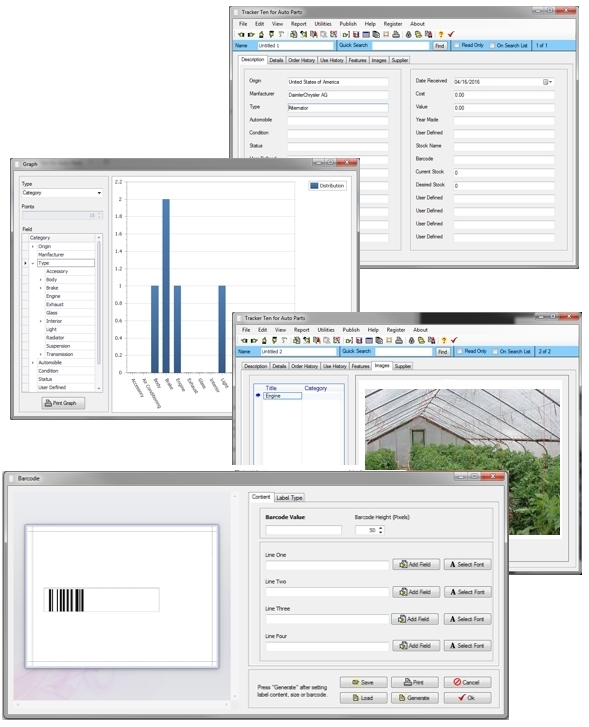

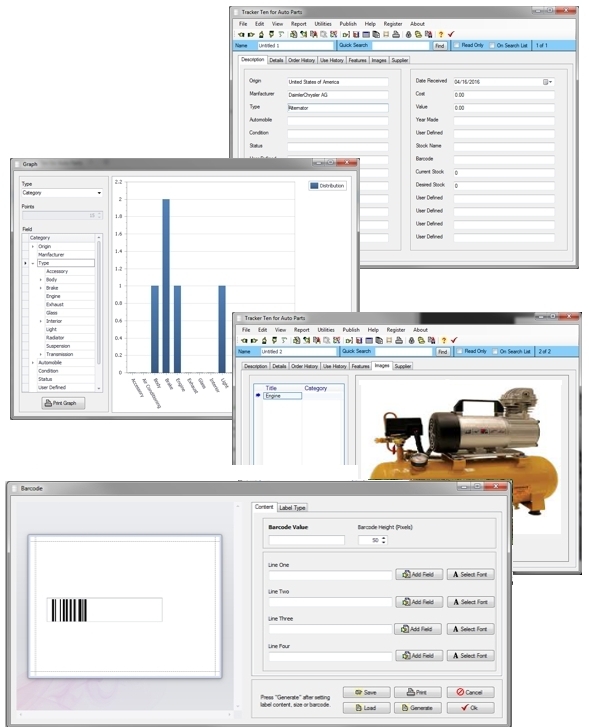

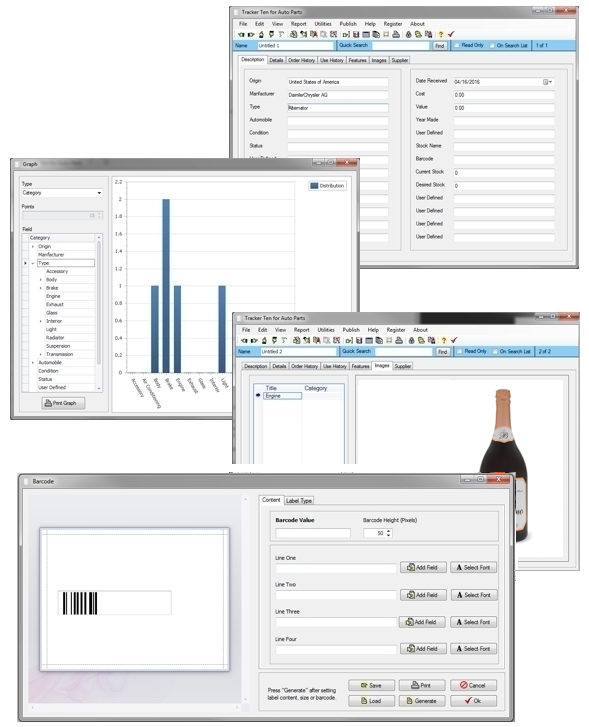

Database Software for Cleaned Data

After cleaning, storing data in a robust database system ensures it remains organized, accessible, and secure. Databases allow for efficient querying, reporting, and further analysis. Browse our site for various database solutions that help store, manage, and utilize your clean datasets efficiently.

Conclusion

Cleaning data is a foundational task in any data-driven organization. It ensures datasets are accurate, consistent, and reliable, supporting better decision-making, compliance, and operational efficiency. By leveraging tools, structured processes, and skilled analysts, organizations can maintain high-quality data that fuels meaningful insights, predictive modeling, and strategic planning.
