Desktop Database Maintenance

Once a desktop database is installed, configured, and actively used, the work does not end there. In fact, the long-term success of any database system depends heavily on routine and well-planned maintenance. Desktop database maintenance is much like maintaining a home or an automobile: regular attention prevents small issues from becoming major failures. Without maintenance, performance degrades, data quality suffers, and recovery from unexpected failures becomes significantly more difficult.

Maintaining a database ensures that your data remains accurate, accessible, and secure. It allows you to verify data integrity, detect early signs of corruption, optimize performance, and plan for future growth. Maintenance also gives you insight into how your data is being used, which tables are growing the fastest, and whether your current hardware resources are still sufficient. This proactive approach can save substantial time and money compared to reacting to emergencies after something goes wrong.

Another critical reason for routine database maintenance is backup reliability. If problems such as corruption or logical errors go unnoticed, those issues may be silently copied into your backup files. When you eventually need to restore data, you may discover that all available backups are unusable. Regular maintenance helps ensure that both your live database and your backups remain healthy.

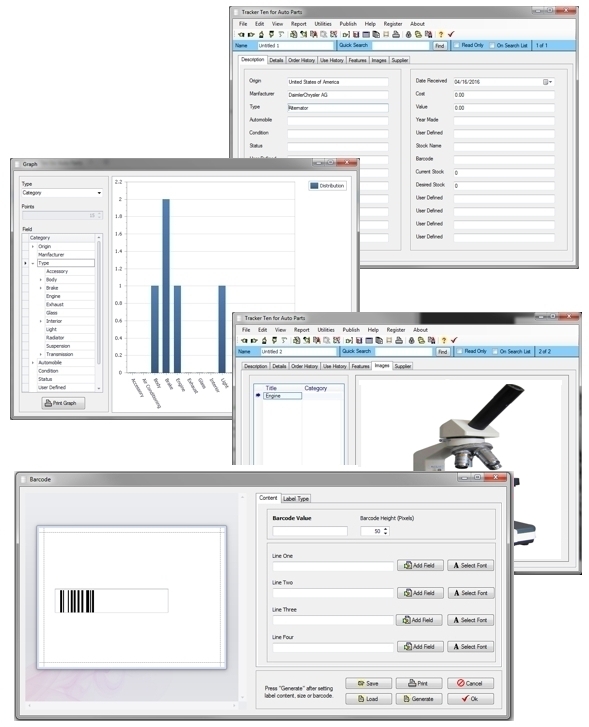

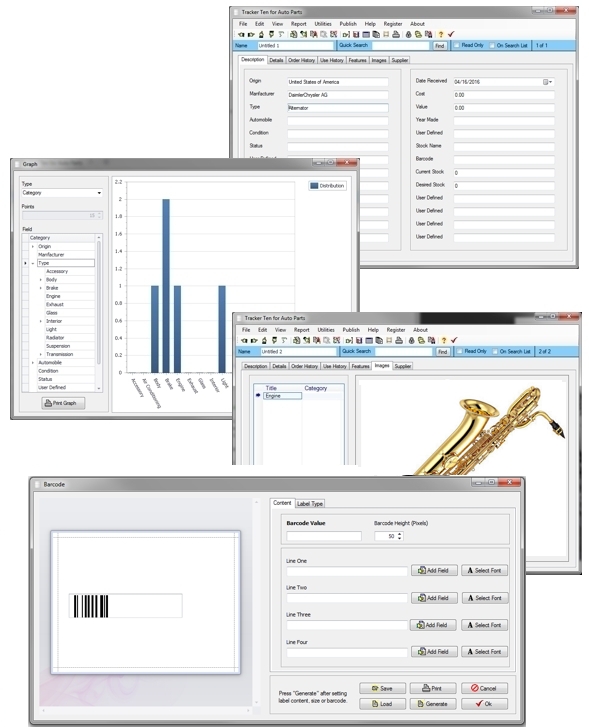

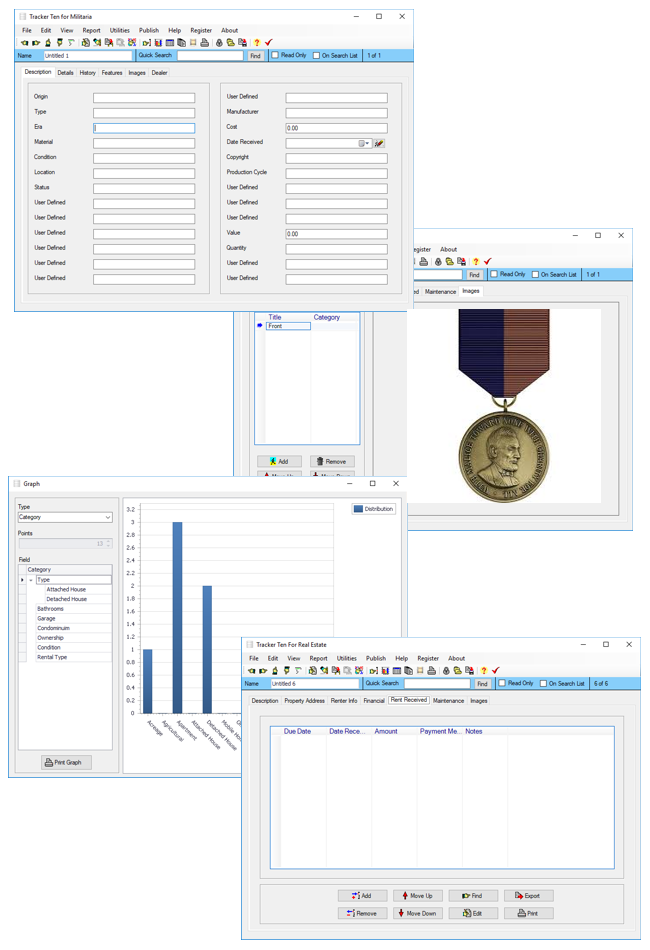

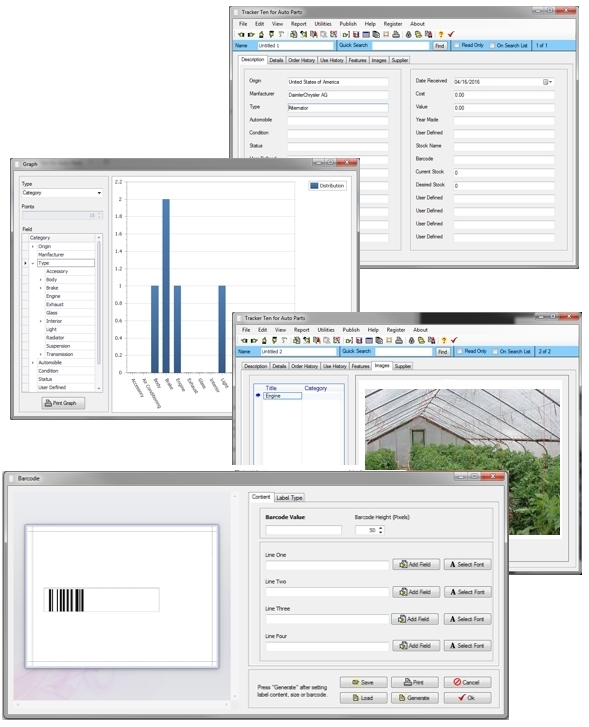

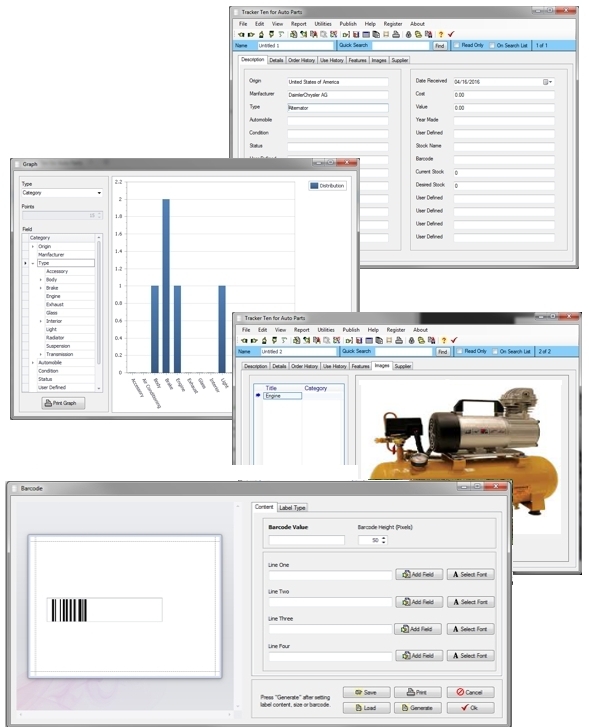

Whether you are working with an enterprise-grade database such as Microsoft SQL Server, Oracle, or MySQL, or a desktop-oriented solution like Tracker Ten, the fundamental principles of database maintenance remain the same. While the tools and interfaces may differ, the goals—stability, performance, and data integrity—are universal.

Understanding the Importance of Desktop Database Maintenance

Desktop databases are often used by small businesses, home offices, research teams, and individuals who rely on them for daily operations. Because they typically run on local machines rather than dedicated servers, desktop databases are more closely tied to the health of the operating system, hardware, and user behavior. This makes maintenance even more important.

Over time, desktop databases naturally grow as new records are added. Files become larger, indexes become fragmented, and queries may take longer to run. Users may add inconsistent data, create redundant records, or accidentally introduce errors. Software updates, operating system changes, or unexpected shutdowns can also affect database stability. Routine maintenance helps keep all of these factors under control.

Another key aspect of maintenance is planning. By regularly reviewing database statistics and performance metrics, you can anticipate future needs. For example, if your database file size has doubled every year, you can plan hardware upgrades before disk space becomes a problem. If certain queries are becoming slower, you can address performance bottlenecks before users become frustrated.
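The doubling example can be turned into a rough estimate. The sketch below assumes growth stays a constant multiple each year, which real databases rarely do exactly; the function name and its parameters are illustrative and not part of any database tool.

```python
import math


def years_until_full(current_gb: float, capacity_gb: float,
                     annual_growth_factor: float = 2.0) -> float:
    """Estimate the years until a file of current_gb reaches capacity_gb.

    Assumes the file grows by the same factor every year
    (2.0 means it doubles annually).
    """
    if current_gb <= 0 or capacity_gb <= 0:
        raise ValueError("sizes must be positive")
    if annual_growth_factor <= 1.0:
        raise ValueError("growth factor must be greater than 1")
    if current_gb >= capacity_gb:
        return 0.0  # already at or over capacity
    # Solve current * factor**years = capacity for years.
    return math.log(capacity_gb / current_gb) / math.log(annual_growth_factor)
```

For instance, a 5 GB file that doubles yearly on a volume with 40 GB available gives `years_until_full(5, 40)`, roughly three years of headroom.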

Creating a Database Maintenance Plan

The most effective way to manage database maintenance is to create a structured maintenance plan. A maintenance plan is a documented set of tasks, schedules, and responsibilities that ensure essential maintenance activities are performed consistently. Even a simple plan can dramatically reduce the risk of data loss and performance issues.

A comprehensive maintenance plan may include procedures for full backups, incremental or differential backups, index rebuilding, occasional database shrinking where it is justified, statistics updates, integrity checks, and log monitoring. The exact contents of your plan will depend on the database system you are using, the size of your database, and how critical the data is to your operations.

Enterprise systems such as Microsoft SQL Server include built-in tools like SQL Server Management Studio (SSMS), which offers maintenance plan wizards. These wizards guide users through the process of setting up scheduled maintenance tasks. Other systems may rely on scripts, command-line tools, or third-party utilities.

While some maintenance activities require advanced technical knowledge, many essential tasks are well within the reach of non-expert users. For example, creating a full database backup is usually straightforward and should be performed regularly. More advanced users may choose to implement differential backups or transaction log backups to reduce backup times and storage requirements.
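As an illustration of how simple a full backup can be, here is a minimal sketch that uses SQLite through Python's standard `sqlite3` module as a stand-in desktop database. SQLite is an assumption made for the example; other products have their own backup commands, and the function name and paths are hypothetical.

```python
import sqlite3


def backup_database(source_path: str, backup_path: str) -> None:
    """Write a full copy of the database at source_path to backup_path.

    Uses SQLite's online backup API, which produces a consistent copy
    even if the source database is in use.
    """
    source = sqlite3.connect(source_path)
    target = sqlite3.connect(backup_path)
    try:
        source.backup(target)  # copies every page of the main database
    finally:
        target.close()
        source.close()
```

A call such as `backup_database("inventory.db", "inventory-backup.db")` could then be run by hand or from a scheduler.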

Automation plays an important role in any maintenance plan. By scheduling routine tasks to run automatically, you reduce the risk of forgetting critical steps. However, automation should always be paired with monitoring and verification to ensure tasks are completing successfully.

Shrinking Your Database

As a database grows, unused space can accumulate within its files. This can happen when records are deleted, large data imports are reversed, or temporary data structures are created and removed. Shrinking a database reclaims this unused space, reducing the file size on disk and making the file quicker to copy or transfer.

One effective way to reduce database size is through data archiving. Archiving involves moving old or infrequently accessed records into a separate database or storage system. This keeps the primary database lean and focused on current data. Deciding when to archive data depends on business requirements, regulatory obligations, and how often historical data is accessed.

Many database systems provide built-in shrinking tools. In Microsoft SQL Server, for example, you can right-click a database in SQL Server Management Studio and choose Tasks, then Shrink, then Database. The engine then moves data pages from the end of the file into free space nearer the start and releases the emptied space at the end of the file back to the operating system.

However, database shrinking should be used with caution. Shrink operations can cause significant index fragmentation, which may degrade performance. After shrinking a database, it is often necessary to rebuild or reorganize indexes to restore optimal performance. Because of this, shrinking should not be performed routinely without a clear reason.
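To make the effect of reclaiming space concrete, the sketch below uses SQLite's `VACUUM` command through Python's `sqlite3` module. SQLite is an assumption chosen for illustration: its `VACUUM` rebuilds the whole file, so its side effects differ from the SQL Server shrink described above. The function name is hypothetical.

```python
import os
import sqlite3
from typing import Tuple


def shrink_database(db_path: str) -> Tuple[int, int]:
    """Run VACUUM on an SQLite file and return (bytes_before, bytes_after)."""
    before = os.path.getsize(db_path)
    conn = sqlite3.connect(db_path)
    try:
        # VACUUM rewrites the database into a compact file,
        # dropping pages left empty by earlier deletes.
        conn.execute("VACUUM")
    finally:
        conn.close()
    after = os.path.getsize(db_path)
    return before, after
```

Comparing the two returned sizes shows whether the operation reclaimed enough space to be worth repeating.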

Another maintenance consideration is backup management. Old backup files can consume a large amount of disk space. Regularly reviewing and deleting obsolete backups can free up storage without affecting the live database. If disk space remains an issue, adding additional storage or upgrading hardware may be necessary.
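One possible way to review old backups is to list everything beyond the newest few files. The sketch below only reports candidates and deletes nothing; the `.bak` extension, the folder layout, and the function name are assumptions for illustration.

```python
from pathlib import Path
from typing import List


def backups_to_prune(backup_dir: str, keep: int = 5,
                     pattern: str = "*.bak") -> List[Path]:
    """Return backup files older than the `keep` most recent ones.

    Files are ranked by modification time, newest first.
    Nothing is deleted; the caller decides what to do with the list.
    """
    if keep < 0:
        raise ValueError("keep must be zero or greater")
    files = sorted(Path(backup_dir).glob(pattern),
                   key=lambda p: p.stat().st_mtime,
                   reverse=True)
    return files[keep:]
```

Returning a list rather than deleting immediately leaves room to check the candidates against any retention requirements first.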

Generating Database Statistics

Database statistics provide valuable insight into how data is stored and accessed. These statistics describe characteristics such as the number of rows in each table, the distribution of values within columns, and the frequency with which queries are executed. Maintaining accurate statistics is essential for both performance tuning and capacity planning.

By reviewing statistics, you can identify which tables contain the most data and which are growing the fastest. Tables with high row counts may benefit from additional indexing or partitioning. Statistics also help you understand which queries consume the most resources, such as CPU time or disk I/O.
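As a minimal sketch of gathering per-table row counts, the function below queries an SQLite database through Python's `sqlite3` module. SQLite is an assumption for the example, and the function name is hypothetical; other systems expose similar figures through their own catalog views or reports.

```python
import sqlite3
from typing import Dict


def table_row_counts(conn: sqlite3.Connection) -> Dict[str, int]:
    """Return a mapping of user table name to its current row count."""
    names = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'")]
    counts = {}
    for name in names:
        # Quote the identifier so unusual table names are handled safely.
        quoted = '"' + name.replace('"', '""') + '"'
        counts[name] = conn.execute(
            f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
    return counts
```

Recording these counts on a schedule and comparing them over time shows which tables are growing fastest.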

If certain queries are consistently slow, you may be able to refactor them to improve efficiency. This might involve rewriting the query, adding indexes, or adjusting how data is stored. When optimization is not possible at the query level, hardware upgrades such as faster processors or additional memory may be required.
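To see whether an added index actually changes how a query runs, many engines can report their execution plan. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module; SQLite, the helper name, and the table and index names in the usage note are assumptions for illustration.

```python
import sqlite3
from typing import List, Sequence


def query_plan(conn: sqlite3.Connection, sql: str,
               params: Sequence = ()) -> List[str]:
    """Return the human-readable plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    # The descriptive text is the last column of each plan row.
    return [row[-1] for row in rows]
```

Calling `query_plan(conn, "SELECT * FROM orders WHERE customer = ?", ("acme",))` before and after `CREATE INDEX idx_orders_customer ON orders (customer)` should show the plan change from a full table scan to a search that names the index.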

Many databases automatically generate logs that record system activity, errors, and performance metrics. These logs can be invaluable for diagnosing problems and identifying trends. In SQL Server, logs can be accessed directly through SQL Server Management Studio. Other systems may require manual configuration to enable logging.

Modern database engines also use statistics internally to optimize query execution. This process, known as cost-based optimization, allows the database to choose the most efficient execution plan based on estimated resource usage. For this optimization to work effectively, statistics must be up to date. Many systems allow you to manually trigger statistics updates when needed, for example with UPDATE STATISTICS in SQL Server or ANALYZE in PostgreSQL and SQLite.
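A manual statistics refresh might look like the following sketch, which assumes an SQLite database accessed through Python's `sqlite3` module. In SQLite the `ANALYZE` command stores its results in the internal `sqlite_stat1` table; the function name is hypothetical.

```python
import sqlite3


def refresh_statistics(conn: sqlite3.Connection) -> list:
    """Recompute optimizer statistics and return the stored rows.

    Each returned row is (table name, index name, stat string).
    """
    conn.execute("ANALYZE")  # gathers statistics for tables and indexes
    conn.commit()
    return conn.execute(
        "SELECT tbl, idx, stat FROM sqlite_stat1 ORDER BY tbl, idx"
    ).fetchall()
```

Running this after a large import or bulk delete gives the query planner figures that reflect the current data.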

Ensuring Database Integrity

Data integrity refers to the accuracy, consistency, and validity of data stored in a database. Maintaining integrity is one of the most important goals of database maintenance, especially in environments where data is shared among multiple users or systems.

There are several types of data integrity that must be considered. Entity integrity ensures that each record in a table can be uniquely identified, usually through a primary key. This prevents duplicate records and ensures that each entity is distinct.

Referential integrity ensures that relationships between tables remain valid. For example, if one table references records in another table, those referenced records must exist. Referential integrity prevents orphaned records and maintains logical consistency across the database.

Domain integrity ensures that values stored in a column are valid for that column. This might include enforcing data types, value ranges, or predefined lists of acceptable values. For example, a state field should not contain city names, and a numeric field should not contain text.

User-defined integrity includes any additional business rules specific to your application. These rules may enforce constraints such as minimum order quantities, valid date ranges, or conditional requirements.
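The four kinds of integrity described above can be sketched as schema constraints. The example assumes SQLite through Python's `sqlite3` module; the `customers` and `orders` tables, their columns, and the helper names are hypothetical and chosen only to mirror the examples in the text.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,                        -- entity integrity
    state TEXT NOT NULL CHECK (length(state) = 2)     -- domain integrity
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL
                REFERENCES customers(id),             -- referential integrity
    quantity    INTEGER NOT NULL CHECK (quantity >= 1) -- business rule
);
"""


def open_with_constraints(path: str = ":memory:") -> sqlite3.Connection:
    """Open a database, enable foreign key checks, and create the schema."""
    conn = sqlite3.connect(path)
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def try_insert(conn: sqlite3.Connection, sql: str, params: tuple) -> bool:
    """Attempt an insert; return False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False
```

With this schema, a duplicate customer id, a state value such as "Albany", an order for a customer that does not exist, and an order quantity of zero are all rejected by the database itself rather than relying on the data entry form.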

If integrity issues are discovered, it is important to identify their root cause. Errors may result from user mistakes, poorly designed forms, software bugs, or malicious activity such as malware. Addressing the cause helps prevent the same issues from recurring.

Detecting Database Corruption

Database corruption occurs when data stored on disk becomes unreadable or inconsistent. Corruption can be caused by hardware failures, software bugs, improper shutdowns, or file system issues. Early detection is essential to prevent data loss and minimize recovery time.

One of the first lines of defense against corruption is monitoring the health of storage devices. Modern operating systems include tools that check disk integrity using techniques such as checksums, parity bits, and cyclic redundancy checks. These methods work by storing additional verification data alongside actual data. If discrepancies are detected, corruption is likely present.
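The verification idea can be illustrated at the file level. The sketch below computes a CRC-32 checksum of a file with Python's `zlib` module so it can be compared against a previously recorded value. It shows the general technique only, not how any particular operating system tool works internally, and the function name is hypothetical.

```python
import zlib


def file_crc32(path: str, chunk_size: int = 1 << 20) -> int:
    """Return the CRC-32 checksum of a file, reading it in chunks."""
    crc = 0
    with open(path, "rb") as f:
        # Feed each chunk into the running checksum until the file ends.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            crc = zlib.crc32(chunk, crc)
    return crc & 0xFFFFFFFF
```

If the checksum of a file that should not have changed, such as an archived backup, differs from the value recorded earlier, the contents have been altered or damaged.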

While hardware reliability has improved significantly, software-related corruption is still a concern. Database systems such as Oracle and SQL Server include built-in validation tools, for example the DBVERIFY utility in Oracle and DBCC CHECKDB in SQL Server, that can analyze database structures for consistency issues. Running these checks regularly helps identify problems before they escalate.
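A regular consistency check can be very short. The sketch below assumes an SQLite database and uses its `PRAGMA integrity_check` command through Python's `sqlite3` module; the function name is hypothetical, and other systems provide their own equivalents.

```python
import sqlite3
from typing import List


def check_integrity(db_path: str) -> List[str]:
    """Return SQLite's integrity report for a database file.

    A healthy database yields ["ok"]; anything else describes a problem.
    """
    conn = sqlite3.connect(db_path)
    try:
        rows = conn.execute("PRAGMA integrity_check").fetchall()
        return [row[0] for row in rows]
    except sqlite3.DatabaseError as exc:
        # Raised when the file is too damaged to be read as a database.
        return [f"unreadable: {exc}"]
    finally:
        conn.close()
```

A scheduled job could call `check_integrity` and raise an alert whenever the result is anything other than `["ok"]`.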

Database logs are another valuable source of information. Unexpected errors, crashes, or warnings recorded in logs may indicate underlying corruption or instability. Regularly reviewing logs allows administrators to spot patterns and take corrective action.

Corruption can spread in two ways. Direct corruption occurs when valid data is overwritten with invalid data. Indirect corruption occurs when already-corrupted data is used to update other records, spreading errors throughout the database. Early detection limits how far either kind can spread, and preventing the cascade also requires strict access controls, validation rules, and careful monitoring of automated processes.

Backup Verification and Recovery Testing

Backups are only useful if they can be restored successfully. As part of regular maintenance, backups should be periodically verified and tested. Verification ensures that backup files are complete and readable, while recovery testing confirms that data can be restored in a real-world scenario.

Testing restores does not necessarily mean overwriting your live database. Restores can be performed on a separate system or into a test environment. This process helps identify issues such as missing files, incompatible versions, or corrupted backups.
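A restore test into a scratch location might be sketched as follows. The example assumes the backup is an SQLite file and uses Python's standard library; the function name and the idea of checking for a list of expected tables are illustrative choices, not a prescribed procedure.

```python
import os
import shutil
import sqlite3
import tempfile
from typing import Iterable


def verify_restore(backup_path: str, expected_tables: Iterable[str]) -> bool:
    """Restore a backup into a temporary folder and sanity-check it.

    The live database is never touched. Returns True only if the copy
    opens, passes the integrity check, and has every expected table.
    """
    with tempfile.TemporaryDirectory() as scratch:
        restored = os.path.join(scratch, "restored.db")
        shutil.copyfile(backup_path, restored)
        conn = sqlite3.connect(restored)
        try:
            status = conn.execute("PRAGMA integrity_check").fetchone()[0]
            tables = {row[0] for row in conn.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table'")}
        except sqlite3.DatabaseError:
            return False  # the file could not be read as a database
        finally:
            conn.close()
    return status == "ok" and set(expected_tables) <= tables
```

Checking for specific tables catches the case where a backup is readable but empty or taken from the wrong database.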

Regular recovery testing also ensures that you understand the restoration process. In an emergency, clear procedures and familiarity with the process can significantly reduce downtime.

Security and Maintenance

Security is an integral part of database maintenance. Over time, user accounts may accumulate, permissions may change, and outdated access rights may persist. Regularly reviewing user permissions helps ensure that users have only the access they need.

Keeping database software up to date is another critical security measure. Updates and patches often address vulnerabilities, bugs, and performance issues. Applying updates as part of a maintenance routine reduces the risk of exploitation and instability.

Conclusion

Desktop database maintenance is not a one-time task but an ongoing process that supports data reliability, performance, and security. By creating a structured maintenance plan, monitoring database health, ensuring data integrity, and proactively addressing issues, you can extend the life of your database and protect the valuable information it contains.

Whether you are using a powerful enterprise database or a user-friendly desktop solution like Tracker Ten, regular maintenance empowers you to stay in control of your data. A well-maintained database is faster, safer, easier to manage, and far more resilient in the face of unexpected challenges.
