Integrating with the Wikipedia & Wikidata APIs Using Python

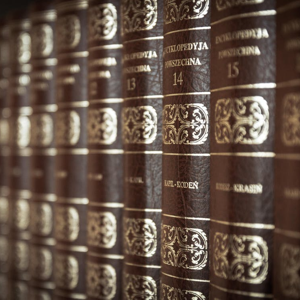

Wikipedia and Wikidata are two of the most influential open-knowledge platforms in the world. Wikipedia provides human-readable encyclopedic content in hundreds of languages, while Wikidata serves as a structured, machine-readable knowledge base that underpins Wikipedia and many other projects. Together, they form a powerful ecosystem for accessing facts, relationships, and contextual information about nearly any topic imaginable.

For developers, data scientists, researchers, and educators, the Wikipedia and Wikidata APIs offer a rich opportunity to integrate authoritative, community-maintained knowledge into applications. Whether you are building a search engine, enriching datasets, powering a chatbot, performing entity resolution, or conducting large-scale analysis, these APIs provide flexible and well-documented access to open data.

This article provides an in-depth, practical guide to integrating with both the Wikipedia and Wikidata APIs using Python. It explains how the APIs differ, how they complement each other, how to query them effectively, and how to design robust integrations. All examples use Python and focus on real-world usage patterns, best practices, and common pitfalls.

Understanding Wikipedia and Wikidata

Although closely related, Wikipedia and Wikidata serve different purposes. Wikipedia is designed primarily for human readers. Its articles are written in natural language, formatted for readability, and intended to provide narrative explanations. The Wikipedia API focuses on retrieving article content, summaries, page metadata, and links.

Wikidata, on the other hand, is a structured database of entities and relationships. Each entity, known as an item, is identified by a unique Q identifier, such as Q42 for Douglas Adams. Properties describe relationships and attributes, such as dates, locations, identifiers, and connections to other entities. Wikidata is designed for machines first, making it ideal for data integration and analysis.

In many applications, the two APIs are used together. Wikipedia provides descriptive context, while Wikidata supplies structured facts. Understanding when to use each is key to building effective integrations.

Why Integrate with Wikipedia and Wikidata?

There are many compelling reasons to integrate with these APIs. They offer free, open access to a vast body of knowledge maintained by a global community. The data is continuously updated, multilingual, and richly interconnected.

Common use cases include enriching user-generated content, validating or supplementing internal datasets, building educational tools, powering knowledge graphs, performing named entity recognition, and supporting question-answering systems. Because the data is open, it can be reused in both commercial and non-commercial projects, subject to licensing requirements.

Setting Up Your Python Environment

To interact with both APIs, you need a modern Python environment. Python 3.8 or newer is recommended. The primary library used in this article is requests, which simplifies HTTP requests.

You can install it using pip:

```bash
pip install requests
```

For more advanced data handling, the standard-library json and datetime modules and the third-party pandas library can be useful, but they are optional. All examples here rely only on standard Python and requests.

Overview of the Wikipedia API

The Wikipedia API is built on top of MediaWiki, the software that powers Wikipedia. The primary endpoint is https://en.wikipedia.org/w/api.php. Requests are made using query parameters that specify actions, formats, and filters.

The API supports many operations, including searching articles, retrieving page content, fetching summaries, listing links, and accessing revision history. Responses are typically returned in JSON format, which is easy to process in Python.
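Wikimedia's User-Agent policy asks API clients to identify themselves with a descriptive User-Agent header, and generic library defaults may be throttled or blocked. The following is a minimal sketch of building such a header. `build_headers` is a hypothetical helper, not part of requests or MediaWiki, and the application name, version, and contact address are placeholders.

```python
def build_headers(app_name: str, version: str, contact: str) -> dict:
    """Return request headers carrying a descriptive User-Agent.

    The "name/version (contact)" layout is a common convention.
    """
    return {"User-Agent": f"{app_name}/{version} ({contact})"}


# Placeholder values: replace them with details of your own project
HEADERS = build_headers("ExampleWikiClient", "0.1", "contact@example.org")
```

The resulting dictionary can be passed to each call, for example `requests.get(url, params=params, headers=HEADERS)`.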

Searching Wikipedia Articles

A common starting point is searching for articles by keyword. The following Python example demonstrates how to search Wikipedia for pages related to "Python programming".

```python
import requests

url = "https://en.wikipedia.org/w/api.php"
params = {
    "action": "query",
    "list": "search",
    "srsearch": "Python programming",
    "format": "json",
}

response = requests.get(url, params=params)
response.raise_for_status()
data = response.json()

# Each search hit carries a title and an HTML snippet
for result in data["query"]["search"]:
    print(result["title"])
```

This request uses the search list to return matching article titles and snippets. From here, you can retrieve full page content or summaries for individual articles.
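A single search request returns only the first batch of hits (ten by default). MediaWiki signals that more are available by including a `continue` object in the response, whose values are merged into the next request. The sketch below follows that pattern. `iter_search_results` and the injected `fetch` callable are hypothetical names, chosen so the paging logic can be shown without network access.

```python
from typing import Callable, Iterator


def iter_search_results(fetch: Callable[[dict], dict], term: str,
                        max_results: int = 50) -> Iterator[dict]:
    """Yield search hits, following MediaWiki's "continue" values.

    fetch takes a params dict and returns the decoded JSON response.
    """
    params = {"action": "query", "list": "search",
              "srsearch": term, "format": "json"}
    yielded = 0
    while True:
        data = fetch(params)
        for hit in data.get("query", {}).get("search", []):
            yield hit
            yielded += 1
            if yielded >= max_results:
                return
        if "continue" not in data:
            return  # no further batches
        # Merge the continuation values into the next request
        params = {**params, **data["continue"]}
```

With requests, `fetch` could be something like `lambda p: requests.get(url, params=p, timeout=10).json()`. Capping the total with `max_results` keeps a broad search term from triggering an unbounded series of requests.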

Retrieving Article Summaries

For many applications, you only need a concise summary rather than the full article text. Wikipedia provides a REST-style endpoint specifically for summaries.

```python
summary_url = "https://en.wikipedia.org/api/rest_v1/page/summary/Python_(programming_language)"

response = requests.get(summary_url)
response.raise_for_status()
summary_data = response.json()

# "extract" holds the plain-text summary of the article
print(summary_data["extract"])
```

This endpoint is simple, efficient, and ideal for user-facing applications such as previews, tooltips, or knowledge panels.
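The summary URL above has the title written in by hand. When titles come from user input or search results, they may contain spaces, slashes, or other characters that need encoding before they are placed in a URL path. A small sketch using only the standard library follows. `build_summary_url` is a hypothetical helper, and the `lang` parameter assumes the usual per-language subdomain layout.

```python
from urllib.parse import quote


def build_summary_url(title: str, lang: str = "en") -> str:
    """Build the REST summary URL for an article title."""
    # Wikipedia titles use underscores in place of spaces
    slug = quote(title.replace(" ", "_"), safe="")
    return f"https://{lang}.wikipedia.org/api/rest_v1/page/summary/{slug}"


print(build_summary_url("Python (programming language)"))
# prints https://en.wikipedia.org/api/rest_v1/page/summary/Python_%28programming_language%29
```

Passing `safe=""` also encodes the slash, so a title such as "AC/DC" stays a single path segment.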

Retrieving Full Article Content

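When full article content is required, you can request it through the MediaWiki API. Depending on the parameters you choose, the API can return wikitext, rendered HTML, or plain text. The example below uses the extracts property to request plain text.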

```python
# url still points at the Wikipedia endpoint defined earlier
params = {
    "action": "query",
    "prop": "extracts",
    "titles": "Python (programming language)",
    "explaintext": True,  # plain text instead of HTML
    "format": "json",
}

response = requests.get(url, params=params)
response.raise_for_status()
data = response.json()

# Results are keyed by page ID
pages = data["query"]["pages"]
for page_id, page in pages.items():
    print(page["extract"][:500])
```

This approach returns the article text without markup, making it easier to process programmatically.
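The loop above assumes every returned page has an `extract` key. When a title does not exist, the API still returns an entry for it, marked with a `missing` key and carrying no extract, so a direct lookup raises `KeyError`. The sketch below handles that case. `extracts_by_title` is a hypothetical helper, and the sample response is hand-written to mirror the shape of the API output rather than copied from a real call.

```python
def extracts_by_title(data: dict) -> dict:
    """Map each returned page title to its extract, or None if unavailable."""
    result = {}
    for page in data.get("query", {}).get("pages", {}).values():
        # Missing pages carry a "missing" key and have no "extract"
        if "missing" in page:
            result[page["title"]] = None
        else:
            result[page["title"]] = page.get("extract")
    return result


# Hand-written sample in the shape of an extracts response
sample = {
    "query": {
        "pages": {
            "123": {"pageid": 123, "title": "Example page", "extract": "Some text."},
            "-1": {"title": "No such page", "missing": ""},
        }
    }
}
print(extracts_by_title(sample))
```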

Introduction to the Wikidata API

While Wikipedia focuses on articles, Wikidata focuses on data. The primary Wikidata API endpoint is https://www.wikidata.org/w/api.php. In addition, Wikidata offers a powerful SPARQL endpoint for complex queries.

Each Wikidata item represents a concept, person, place, or object. Items have statements composed of properties and values, which may themselves be other items or literal values.

Searching for Wikidata Items

The first step when working with Wikidata is often to search for an item by name.

```python
url = "https://www.wikidata.org/w/api.php"
params = {
    "action": "wbsearchentities",
    "search": "Python programming language",
    "language": "en",
    "format": "json",
}

response = requests.get(url, params=params)
response.raise_for_status()
data = response.json()

for result in data["search"]:
    # Not every item has a description, so fall back to an empty string
    print(result["id"], result["label"], "-", result.get("description", ""))
```

This returns matching entities along with their identifiers. Once you have an item ID, you can retrieve detailed structured data.

Retrieving Structured Data from Wikidata

After identifying an item, you can request its full entity data.

```python
item_id = "Q28865"
params = {
    "action": "wbgetentities",
    "ids": item_id,
    "format": "json",
}

response = requests.get(url, params=params)
response.raise_for_status()
data = response.json()

# Entities are keyed by their ID in the response
entity = data["entities"][item_id]
print(entity["labels"]["en"]["value"])
```

The returned structure includes labels, descriptions, aliases, and claims. Claims represent properties and their values. Parsing these requires understanding Wikidata’s data model.

Understanding Wikidata Properties and Claims

Properties in Wikidata are identified by P numbers, such as P31 for "instance of" or P569 for "date of birth". Each claim may include qualifiers and references, adding richness and provenance.

Extracting values often involves navigating nested dictionaries. For example, retrieving the "instance of" property requires iterating over claims and resolving item IDs to human-readable labels.
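The nesting described here can be sketched as follows. Each claim stores its main value under `mainsnak`, and item-valued snaks keep the Q identifier under `datavalue` → `value` → `id`. `item_values` is a hypothetical helper, and the sample entity is a hand-written, heavily trimmed illustration of the JSON shape, not real API output.

```python
def item_values(entity: dict, property_id: str) -> list:
    """Return the Q identifiers that an entity's claims give for a property."""
    ids = []
    for claim in entity.get("claims", {}).get(property_id, []):
        snak = claim.get("mainsnak", {})
        # "novalue" and "somevalue" snaks have no datavalue
        if snak.get("snaktype") != "value":
            continue
        datavalue = snak["datavalue"]
        if datavalue.get("type") == "wikibase-entityid":
            ids.append(datavalue["value"]["id"])
    return ids


# Hand-written, trimmed sample in the shape of a wbgetentities entity
sample_entity = {
    "claims": {
        "P31": [
            {
                "mainsnak": {
                    "snaktype": "value",
                    "property": "P31",
                    "datavalue": {
                        "type": "wikibase-entityid",
                        "value": {"entity-type": "item", "id": "Q9143"},
                    },
                },
                "rank": "normal",
            },
            {"mainsnak": {"snaktype": "somevalue", "property": "P31"}},
        ]
    }
}
print(item_values(sample_entity, "P31"))  # prints ['Q9143']
```

The identifiers returned this way can then be resolved to labels with a second `wbgetentities` request that asks only for labels.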

Using the Wikidata SPARQL Endpoint

For complex queries, Wikidata provides a SPARQL endpoint at https://query.wikidata.org/sparql. SPARQL allows you to query relationships across the entire dataset.

```python
query = """
SELECT ?item ?itemLabel WHERE {
  ?item wdt:P31 wd:Q9143.
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
LIMIT 5
"""

headers = {
    # Standard media type for SPARQL query results in JSON
    "Accept": "application/sparql-results+json",
}

response = requests.get(
    "https://query.wikidata.org/sparql",
    headers=headers,
    params={"query": query},
)
response.raise_for_status()
data = response.json()

for binding in data["results"]["bindings"]:
    print(binding["itemLabel"]["value"])
```

This example retrieves a list of programming languages. SPARQL is extremely powerful and well-suited for analytics and knowledge graph applications.
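SPARQL JSON results wrap every cell in an object with `type` and `value` keys, which is verbose to work with directly. A small sketch that flattens the bindings into plain dictionaries is shown below. `bindings_to_rows` is a hypothetical helper, and the sample response is hand-written to mirror the result format.

```python
def bindings_to_rows(data: dict) -> list:
    """Flatten SPARQL JSON results into a list of {variable: value} dicts."""
    return [
        {name: cell["value"] for name, cell in binding.items()}
        for binding in data["results"]["bindings"]
    ]


# Hand-written sample in the SPARQL JSON results format
sample_results = {
    "head": {"vars": ["item", "itemLabel"]},
    "results": {
        "bindings": [
            {
                "item": {"type": "uri",
                         "value": "http://www.wikidata.org/entity/Q28865"},
                "itemLabel": {"type": "literal", "xml:lang": "en",
                              "value": "Python"},
            }
        ]
    },
}

for row in bindings_to_rows(sample_results):
    # The Q identifier is the last path segment of the entity URI
    qid = row["item"].rsplit("/", 1)[-1]
    print(qid, row["itemLabel"])  # prints Q28865 Python
```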

Combining Wikipedia and Wikidata

One of the most powerful patterns is combining both APIs. Wikipedia articles often link to their corresponding Wikidata items. You can use Wikidata for structured facts and Wikipedia for descriptive text.

For example, you might retrieve an article summary from Wikipedia and augment it with birth dates, coordinates, or identifiers from Wikidata. This hybrid approach produces richer and more flexible results.
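One way to move from an article to its item is the MediaWiki `pageprops` property, which exposes the linked Wikidata ID under the `wikibase_item` key. The sketch below builds the request parameters and reads the ID from the response. Both function names are hypothetical, and the sample response is hand-written to mirror the API's shape.

```python
from typing import Optional


def pageprops_params(title: str) -> dict:
    """Build query parameters that ask for a page's Wikidata item ID."""
    return {
        "action": "query",
        "prop": "pageprops",
        "ppprop": "wikibase_item",
        "titles": title,
        "format": "json",
    }


def wikidata_id(data: dict) -> Optional[str]:
    """Return the Q identifier from a pageprops response, or None."""
    for page in data.get("query", {}).get("pages", {}).values():
        qid = page.get("pageprops", {}).get("wikibase_item")
        if qid:
            return qid
    return None  # page missing, or not linked to an item


# Hand-written sample in the shape of a pageprops response
sample_response = {
    "query": {
        "pages": {
            "123": {"pageid": 123, "title": "Example page",
                    "pageprops": {"wikibase_item": "Q28865"}}
        }
    }
}
print(wikidata_id(sample_response))  # prints Q28865
```

The parameters go to the Wikipedia endpoint, and the returned ID can then be used with `wbgetentities` on the Wikidata endpoint to fetch structured facts for the same topic.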

Error Handling and Reliability

As with any external API, robust error handling is essential. Network errors, missing pages, ambiguous search results, and schema changes can all occur.

Using timeouts, checking HTTP status codes, and validating response structures will make your integration more resilient. Gracefully handling missing data is especially important with community-maintained datasets.
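As one illustration of these points, the sketch below retries a failing call with exponential backoff. `call_with_retries` is a hypothetical helper rather than part of requests. It retries on `OSError` by default, which covers the exceptions requests raises because they derive from it, and the attempt count and delays are arbitrary choices.

```python
import time


def call_with_retries(fetch, attempts=3, base_delay=1.0,
                      retry_on=(OSError,), sleep=time.sleep):
    """Call fetch() and retry with exponential backoff on transient errors."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait 1x, 2x, 4x, ... the base delay between attempts
            sleep(base_delay * 2 ** attempt)
```

A typical `fetch` would wrap a single request, setting `timeout=` on `requests.get`, calling `raise_for_status()`, and returning `response.json()`, so that timeouts and HTTP errors both trigger a retry. Retrying every HTTP error is a simplification, since a 404 for a missing page will not succeed on a second attempt.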

Performance and Caching

Both Wikipedia and Wikidata encourage responsible usage. Repeatedly fetching the same data should be avoided. Caching responses locally or in memory can dramatically improve performance and reduce load on the APIs.
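A minimal in-memory cache with expiry might look like the sketch below. `TTLCache` is a hypothetical class written for illustration, and the injectable `clock` exists only to make the expiry behaviour easy to test. A production system would more likely use an established caching library or an external store.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expiry time, value)

    def get_or_fetch(self, key, fetch):
        """Return the cached value for key, calling fetch() if absent or stale."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = fetch()
        self._entries[key] = (now + self._ttl, value)
        return value
```

A cache key could be the item ID or the article title, with `fetch` wrapping the actual request. Because the underlying data is edited continuously, the expiry time is a trade-off between freshness and load.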

For large-scale applications, batching requests and using SPARQL queries instead of many small calls can also improve efficiency.
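For batching, `wbgetentities` accepts several IDs in one request, separated by the pipe character. The sketch below splits a list of IDs into batches and builds the parameters for each. `batched_entity_params` is a hypothetical helper, and the default of 50 reflects the usual per-request limit for clients without elevated rights.

```python
def batched_entity_params(item_ids, batch_size=50):
    """Yield wbgetentities parameters covering item_ids in batches."""
    ids = list(item_ids)
    for start in range(0, len(ids), batch_size):
        chunk = ids[start:start + batch_size]
        yield {
            "action": "wbgetentities",
            "ids": "|".join(chunk),  # multiple IDs are pipe-separated
            "format": "json",
        }


for params in batched_entity_params(["Q42", "Q28865", "Q9143"], batch_size=2):
    print(params["ids"])
# prints Q42|Q28865
# prints Q9143
```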

Licensing and Attribution

Content from Wikipedia and data from Wikidata are provided under open licenses. Wikipedia text is licensed under Creative Commons Attribution-ShareAlike (CC BY-SA), while Wikidata data is released under CC0.

Applications must comply with these licenses, particularly with respect to attribution and redistribution of modified content. In practice this mostly concerns Wikipedia text, where attribution and share-alike terms apply, since CC0 places no such conditions on Wikidata data. Understanding licensing requirements is an essential part of responsible integration.

Common Use Cases

Integrations with Wikipedia and Wikidata are used in search engines, digital assistants, educational platforms, data enrichment pipelines, recommendation systems, and research tools. Their openness and breadth make them suitable for projects ranging from small prototypes to large-scale production systems.

Conclusion

Integrating with the Wikipedia and Wikidata APIs using Python provides access to one of the richest open knowledge ecosystems available. Wikipedia offers human-readable context, while Wikidata supplies structured, machine-friendly facts. Together, they enable powerful applications that combine narrative understanding with precise data.

By mastering the APIs, understanding the underlying data models, and following best practices for performance, error handling, and licensing, developers can build robust and future-proof systems. As open knowledge continues to grow in importance, the ability to integrate with Wikipedia and Wikidata is an increasingly valuable skill.

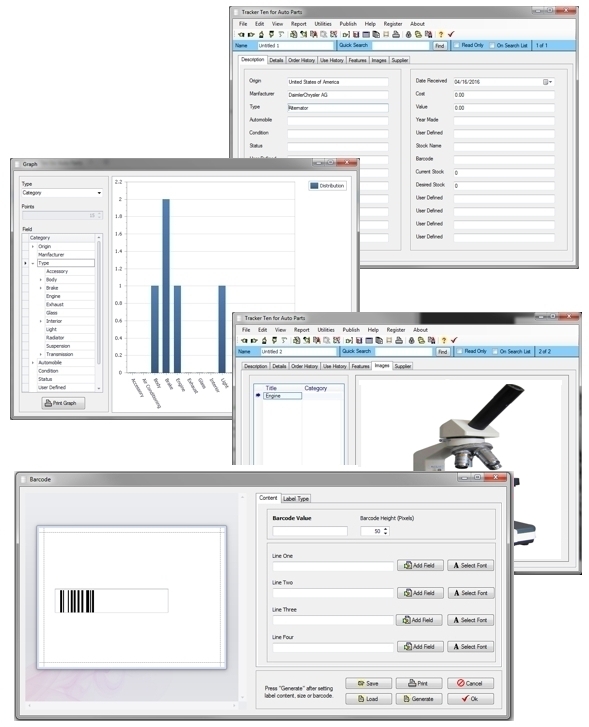

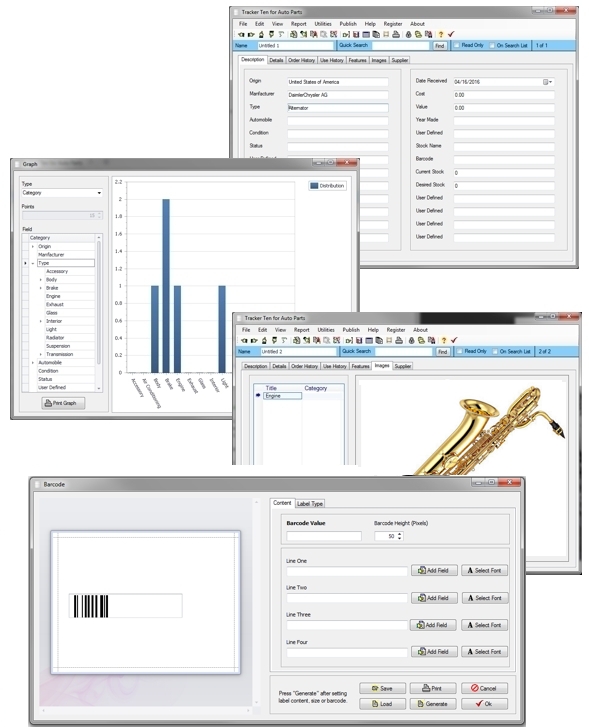

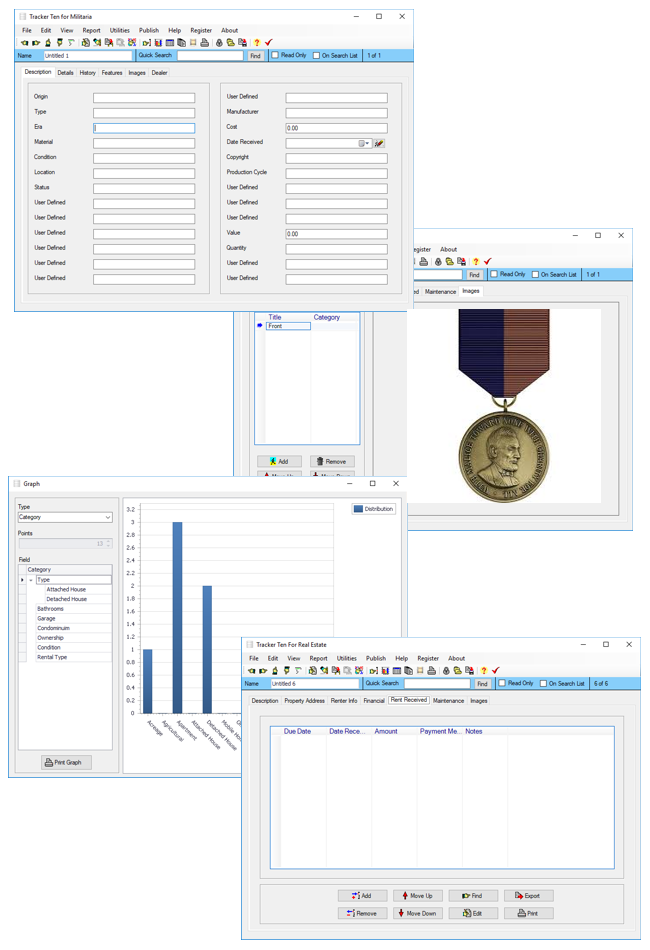

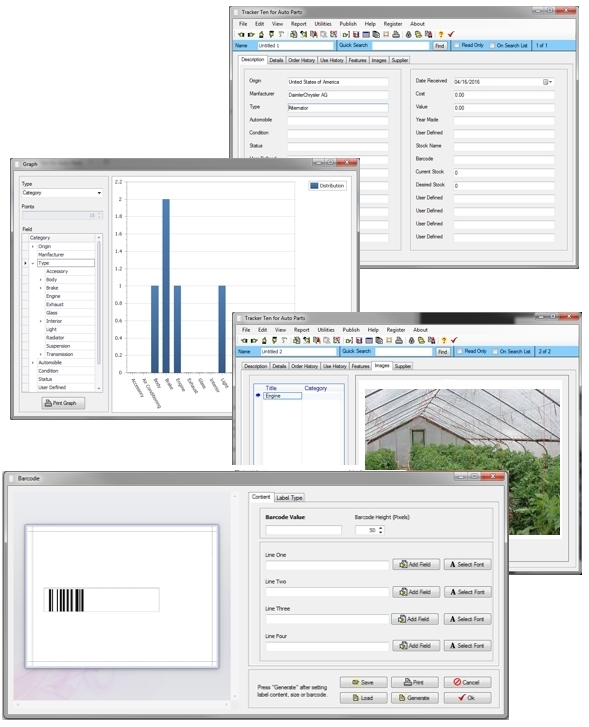

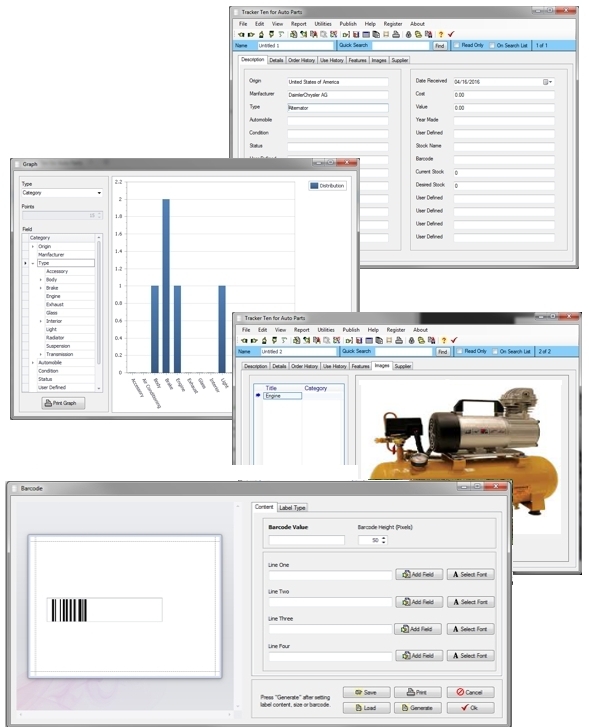

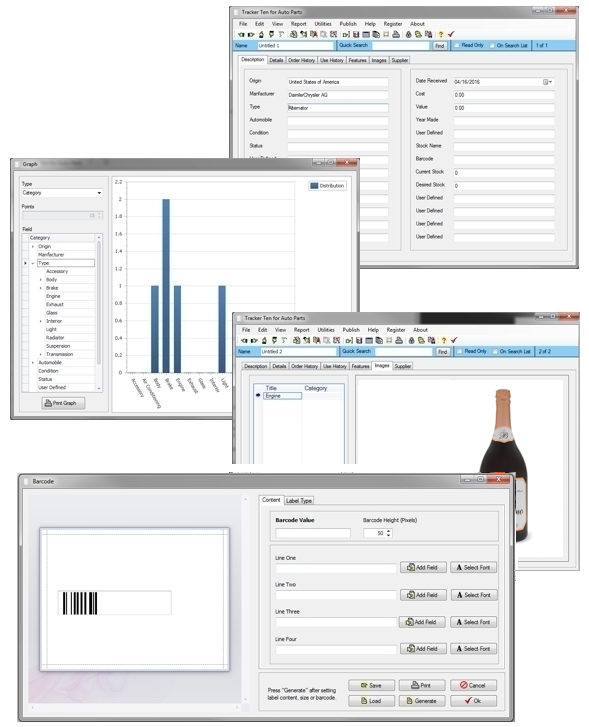
