
Sorting Data

Sorting data refers to the process of arranging data in a particular order, typically ascending or descending, based on one or more attributes or criteria. Sorting is one of the most fundamental operations in data processing and is essential for organizing, analyzing, and interpreting information efficiently. Properly sorted data makes it easier to identify trends, detect anomalies, and perform a wide range of computational operations.

Sorting is not limited to lists of numbers or words. It applies equally to records in tables, where rows are ordered by the values of one or more fields, and to multi-dimensional datasets. By arranging data logically, users can quickly locate items, compare records, and derive insights that may not be evident in unsorted datasets.

The process of sorting has evolved over decades, from simple manual techniques to sophisticated algorithms that can handle massive datasets efficiently. Today, sorting is used extensively in databases, spreadsheets, programming applications, and big data systems.

Sorting is also a key skill for data analysts, scientists, and developers. It helps in preparing data for analysis, cleaning datasets, and optimizing computational processes. Effective sorting can reduce processing time, place duplicate or inconsistent entries next to each other where they are easy to spot, and support accurate decision-making.

Why Sort Data

Sorting data serves multiple purposes in data processing and analysis. Its benefits extend beyond mere organization and can enhance efficiency, accuracy, and insight generation.

Identification of patterns: Sorting allows users to detect patterns, trends, or anomalies that might not be obvious in unsorted data. For example, arranging sales data by product category or region can reveal top-performing products or regions with declining sales.

Easy retrieval: Sorted data enables faster access. Telephone directories, inventory lists, and customer databases are typically sorted alphabetically or numerically for quick reference.

Efficient searching: Sorting facilitates efficient searching using algorithms such as binary search, which can locate items quickly in a sorted list by repeatedly dividing the search space in half.
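As a minimal sketch, binary search over a sorted Python list could look like this. The function name `binary_search` and the sample prices are illustrative only; in practice Python's standard `bisect` module provides the same halving search ready-made.

```python
def binary_search(items, target):
    """Return the index of target in the sorted list items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2          # middle of the remaining search space
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1              # target can only be in the upper half
        else:
            hi = mid - 1              # target can only be in the lower half
    return -1


prices = [3, 8, 15, 21, 42, 57, 90]   # the list must already be sorted
print(binary_search(prices, 42))      # 4
print(binary_search(prices, 10))      # -1
```

Because each comparison halves the remaining range, a sorted list of one million items needs at most 20 comparisons, whereas a scan of an unsorted list may need to look at every item.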

Data analysis: Sorting often precedes analytical tasks like aggregation, summarization, and filtering. Financial data sorted by date allows analysts to track revenue trends, seasonal patterns, and other time-based insights.
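The sketch below, built on hypothetical transaction data, sorts records by date and then totals them by month. It uses Python's `itertools.groupby`, which only groups adjacent records with equal keys, so the preceding sort is what makes the aggregation correct. The function names are illustrative.

```python
from datetime import date
from itertools import groupby

# Hypothetical transactions: (transaction date, amount)
transactions = [
    (date(2023, 3, 14), 120.0),
    (date(2023, 1, 5), 80.0),
    (date(2023, 3, 2), 45.5),
    (date(2023, 1, 28), 200.0),
    (date(2023, 2, 11), 60.0),
]


def month_of(row):
    """Grouping key: the (year, month) of a transaction."""
    return (row[0].year, row[0].month)


def monthly_totals(rows):
    """Sort rows by date, then sum the amounts for each (year, month)."""
    ordered = sorted(rows, key=lambda r: r[0])    # chronological order
    # groupby only merges adjacent rows with equal keys, hence the sort first
    return [(month, sum(amount for _, amount in group))
            for month, group in groupby(ordered, key=month_of)]


for (year, month), total in monthly_totals(transactions):
    print(f"{year}-{month:02d}: {total:.2f}")
```

Without the sort, the two January transactions would not be adjacent and `groupby` would report January twice.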

Optimization: Sorting can optimize operational tasks, including file I/O, database queries, and network communication. For instance, processing records in sorted order keeps related items together, which reduces repeated disk access, and lets database engines use faster lookup and join strategies.

Overall, sorting is an essential operation that improves the usability, efficiency, and analytical value of data. It ensures that datasets are structured in a meaningful way for decision-making and processing.

Data Sorting Techniques

There are many techniques to sort data, ranging from simple manual methods to highly sophisticated computational algorithms. The choice of technique depends on the data size, complexity, required performance, and available software tools.

Bubble Sort: A simple algorithm that repeatedly compares adjacent elements and swaps them if they are in the wrong order. While easy to implement, bubble sort needs O(n²) comparisons in the average and worst cases, so it is inefficient for large datasets.
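A minimal bubble sort sketch in Python follows; the function name is illustrative, and it returns a new list instead of sorting in place. The early exit when a pass makes no swaps is a common optimization that lets already sorted input finish after one pass.

```python
def bubble_sort(items):
    """Return a new list sorted in ascending order using bubble sort."""
    data = list(items)                 # work on a copy
    n = len(data)
    for end in range(n - 1, 0, -1):
        swapped = False
        # Each pass carries the largest remaining value up to position `end`
        for i in range(end):
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                swapped = True
        if not swapped:                # no swaps: the list is already sorted
            break
    return data


print(bubble_sort([5, 1, 4, 2, 8]))   # [1, 2, 4, 5, 8]
```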

Insertion Sort: Divides the list into sorted and unsorted sections. Each element from the unsorted section is inserted into its correct position in the sorted section. It is efficient for small or nearly sorted datasets, because few elements need to be moved.
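Here is a minimal insertion sort sketch in Python (the function name is illustrative). The growing prefix `data[:i]` is the sorted section; each new element shifts larger neighbours one slot right until its position is found.

```python
def insertion_sort(items):
    """Return a new list sorted in ascending order using insertion sort."""
    data = list(items)
    for i in range(1, len(data)):
        current = data[i]
        j = i - 1
        # Shift larger elements of the sorted prefix one slot to the right
        while j >= 0 and data[j] > current:
            data[j + 1] = data[j]
            j -= 1
        data[j + 1] = current          # drop the element into the gap
    return data


print(insertion_sort([12, 11, 13, 5, 6]))   # [5, 6, 11, 12, 13]
```

On nearly sorted input the inner `while` loop rarely runs, which is why insertion sort approaches linear time in that case.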

Selection Sort: Finds the smallest (or largest) element in the unsorted section and swaps it into the first unsorted position. The process repeats until the list is sorted. It is simple but always needs O(n²) comparisons, so it is less efficient than other algorithms for large datasets.
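A minimal selection sort sketch in Python, with an illustrative function name: each outer iteration scans the unsorted section for its minimum and swaps that value into place.

```python
def selection_sort(items):
    """Return a new list sorted in ascending order using selection sort."""
    data = list(items)
    n = len(data)
    for i in range(n - 1):
        smallest = i
        for j in range(i + 1, n):      # scan the unsorted section
            if data[j] < data[smallest]:
                smallest = j
        # Move the minimum into the first unsorted position
        data[i], data[smallest] = data[smallest], data[i]
    return data


print(selection_sort([64, 25, 12, 22, 11]))   # [11, 12, 22, 25, 64]
```

Selection sort performs at most n - 1 swaps, which can matter when writes are expensive, but its comparison count does not improve on sorted input.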

QuickSort: A divide-and-conquer algorithm that selects a pivot, partitions the dataset into elements less than and greater than the pivot, and recursively sorts each partition. QuickSort runs in O(n log n) time on average, degrading to O(n²) when pivots are chosen poorly, and is highly efficient and widely used in practice.
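The following Python sketch shows the divide-and-conquer structure. It is a readable variant that builds new lists and groups values equal to the pivot separately, using the middle element as pivot; library implementations usually partition in place to save memory.

```python
def quicksort(items):
    """Return a new sorted list using a list-based QuickSort."""
    if len(items) <= 1:
        return list(items)
    pivot = items[len(items) // 2]             # middle element as the pivot
    less = [x for x in items if x < pivot]     # partition around the pivot
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    # Recursively sort each partition and join the results
    return quicksort(less) + equal + quicksort(greater)


print(quicksort([33, 10, 55, 71, 29, 3, 18, 42]))   # [3, 10, 18, 29, 33, 42, 55, 71]
```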

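Merge Sort: Divides the dataset into smaller sublists, sorts them, and merges them into a single sorted list. Merge sort is stable when the merge step takes from the left sublist on ties, runs in O(n log n) time in every case, and is suitable for large datasets, although the usual array-based version needs extra memory proportional to the input.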
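A minimal merge sort sketch in Python follows. The optional `key` parameter is an illustrative addition so that stability can be seen on records; the customer names and counts in the example are hypothetical.

```python
def merge_sort(items, key=lambda x: x):
    """Return a new list sorted by key using a stable top-down merge sort."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid], key)        # sort each half recursively
    right = merge_sort(items[mid:], key)
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # "<=" takes from the left half on ties, which keeps the sort stable
        if key(left[i]) <= key(right[j]):
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])                    # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


orders = [("Smith", 3), ("Jones", 1), ("Brown", 3), ("Lee", 1)]
print(merge_sort(orders, key=lambda r: r[1]))
# [('Jones', 1), ('Lee', 1), ('Smith', 3), ('Brown', 3)]
```

Records with equal counts keep their original relative order (Jones before Lee, Smith before Brown), which is what stability means.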

Heap Sort: Uses a binary max-heap to repeatedly extract the largest element and place it at the end of the list. Heap sort runs in O(n log n) time in every case and sorts in place, but it is not stable.
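A minimal heap sort sketch in Python, with illustrative names: the list is first rearranged into a max-heap, then the root (the largest value) is repeatedly swapped to the end of the shrinking unsorted region. It sorts a copy in place rather than using the standard `heapq` module, which provides a min-heap.

```python
def heap_sort(items):
    """Return a new list sorted in ascending order using heap sort."""
    data = list(items)
    n = len(data)

    def sift_down(root, end):
        """Restore the max-heap property for the subtree at root (indices < end)."""
        while True:
            child = 2 * root + 1                       # left child
            if child >= end:
                return
            if child + 1 < end and data[child + 1] > data[child]:
                child += 1                             # use the larger child
            if data[root] >= data[child]:
                return
            data[root], data[child] = data[child], data[root]
            root = child

    # Build a max-heap by sifting down every non-leaf node, bottom-up
    for start in range(n // 2 - 1, -1, -1):
        sift_down(start, n)
    # Move the largest element to the end, then repair the smaller heap
    for end in range(n - 1, 0, -1):
        data[0], data[end] = data[end], data[0]
        sift_down(0, end)
    return data


print(heap_sort([9, 4, 7, 1, 8, 2]))   # [1, 2, 4, 7, 8, 9]
```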

The choice of sorting technique should consider computational complexity, dataset size, memory usage, and the need for stability. Typical in-place implementations of QuickSort and Heap Sort are fast but not stable, while Merge Sort is stable and predictable in performance.

Sorting Large Datasets

Sorting small datasets is straightforward, but large datasets present unique challenges. Memory limitations, processing time, and hardware constraints necessitate specialized techniques for efficient sorting.

External Sorting: Large datasets are divided into smaller chunks that fit into memory, sorted individually, and then merged. External sorting is common in databases and file systems.

Parallel Sorting: Multiple processors or machines sort subsets of the dataset concurrently. This technique is used in high-performance computing and distributed systems.
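As a sketch of the idea on a single machine, the Python example below splits the data into chunks, sorts each chunk in a separate worker process, and combines the sorted chunks with a k-way merge (`heapq.merge`). The function name, the `workers` default, and the `executor_cls` parameter are illustrative assumptions; a thread pool can be passed in where processes are inconvenient, although in standard CPython threads will not speed up the sorting itself.

```python
import heapq
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def parallel_sort(items, workers=4, executor_cls=ProcessPoolExecutor):
    """Sort chunks concurrently, then merge the sorted chunks into one list."""
    data = list(items)
    if not data:
        return []
    size = -(-len(data) // workers)                   # ceiling division: chunk length
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with executor_cls(max_workers=workers) as pool:
        sorted_chunks = list(pool.map(sorted, chunks))  # each worker sorts one chunk
    return list(heapq.merge(*sorted_chunks))          # k-way merge of sorted runs


if __name__ == "__main__":
    # The __main__ guard is needed on platforms that spawn worker processes
    print(parallel_sort([42, 7, 19, 3, 88, 25, 61, 10, 5]))
```

Distributed frameworks follow the same pattern at a larger scale: the chunks live on different nodes, and the merge (or a range partitioning step) happens across the cluster.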

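Sampling: A random sample of the dataset is sorted, and values taken at regular intervals from that sample serve as boundaries (splitters) that divide the full dataset into ranges of roughly equal size. Each range can then be sorted independently. This approach, known as sample sort, is useful for massive datasets where sorting everything at once is impractical.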
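The Python sketch below illustrates sample sort under simple assumptions: the sample size, number of partitions, and fixed random seed are arbitrary illustrative choices, and all partitions are sorted in one process here, whereas a real system would hand each partition to a different worker or machine.

```python
import random
from bisect import bisect_right


def sample_sort(items, partitions=4, sample_size=32, seed=0):
    """Sort by splitting data around splitters chosen from a sorted random sample."""
    data = list(items)
    if len(data) <= sample_size:
        return sorted(data)
    rng = random.Random(seed)
    sample = sorted(rng.sample(data, sample_size))   # only the sample is sorted up front
    step = sample_size // partitions
    # partitions - 1 evenly spaced splitters taken from the sorted sample
    splitters = [sample[i * step] for i in range(1, partitions)]
    groups = [[] for _ in range(partitions)]
    for x in data:
        # bisect_right tells us which value range x belongs to
        groups[bisect_right(splitters, x)].append(x)
    result = []
    for group in groups:          # each group could be sorted on a separate node
        result.extend(sorted(group))
    return result


values = [(i * 37) % 101 for i in range(200)]
print(sample_sort(values) == sorted(values))   # True
```

Because every value in one range is smaller than every value in the next, concatenating the sorted ranges yields a fully sorted result without a final merge.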

Bucket Sorting: Divides data into buckets that each cover a range of values, sorts within each bucket, and concatenates the buckets in order. It is efficient when values are spread fairly uniformly, so that buckets stay similar in size.
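A minimal bucket sort sketch in Python, assuming the values are floats in the range [0, 1); the function name and the default of ten equal-width buckets are illustrative choices.

```python
def bucket_sort(values, num_buckets=10):
    """Sort floats in [0, 1) by distributing them into equal-width buckets."""
    buckets = [[] for _ in range(num_buckets)]
    for v in values:
        # The bucket index comes from the value itself; min() guards rounding at the top end
        index = min(int(v * num_buckets), num_buckets - 1)
        buckets[index].append(v)
    result = []
    for bucket in buckets:
        result.extend(sorted(bucket))   # each bucket is small, so it sorts quickly
    return result


print(bucket_sort([0.42, 0.32, 0.73, 0.05, 0.99, 0.5, 0.31]))
# [0.05, 0.31, 0.32, 0.42, 0.5, 0.73, 0.99]
```

If most values fell into one bucket, that bucket's sort would dominate the running time, which is why a uniform distribution matters.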

Distributed Sorting: Data is split across multiple nodes in a cluster. Each node sorts its portion, and the results are merged. Common in big data frameworks like Hadoop or Spark.

External Merge Sort: The most common form of external sorting. Chunks that fit in memory are sorted and written to disk as sorted runs, which are then merged sequentially from disk into the final output.
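The Python sketch below shows external merge sort for a stream of integers. The `chunk_size` parameter stands in for "how many records fit in memory" and, like the function names, is an illustrative assumption. Sorted runs are written to temporary files, and `heapq.merge` reads them back lazily, holding only one line per run in memory at a time.

```python
import heapq
import os
import tempfile


def _write_run(sorted_chunk):
    """Write one sorted run to a temporary file and return its path."""
    fd, path = tempfile.mkstemp(suffix=".run")
    with os.fdopen(fd, "w") as f:
        f.writelines(f"{v}\n" for v in sorted_chunk)
    return path


def external_sort(values, chunk_size=1000):
    """Sort an iterable of integers via sorted runs on disk and a k-way merge."""
    run_paths = []
    try:
        chunk = []
        for v in values:                     # phase 1: create sorted runs
            chunk.append(v)
            if len(chunk) == chunk_size:
                run_paths.append(_write_run(sorted(chunk)))
                chunk = []
        if chunk:
            run_paths.append(_write_run(sorted(chunk)))
        files = [open(p) for p in run_paths]
        try:
            # phase 2: merge all runs, reading each file sequentially
            return list(heapq.merge(*(map(int, f) for f in files)))
        finally:
            for f in files:
                f.close()
    finally:
        for p in run_paths:                  # remove the temporary run files
            os.remove(p)


print(external_sort([5, 3, 9, 1, 7, 2, 8], chunk_size=3))   # [1, 2, 3, 5, 7, 8, 9]
```

For demonstration the merged result is returned as a list; a real external sort would stream it to an output file so that the full dataset never has to fit in memory.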

Efficient sorting of large datasets requires balancing memory usage, processing power, and algorithm complexity. Choosing the right technique can dramatically reduce computational overhead.

Choosing a Sort Key

The sort key determines the attribute by which data is ordered. Selecting an appropriate sort key is critical for meaningful and accurate sorting.

Relevance: The key should be meaningful to the task. Sorting customers by purchase history may be more relevant for marketing than by name.

Uniqueness: High uniqueness reduces ambiguity. Sorting sales by transaction ID is more reliable than by customer name.

Size: Smaller keys use less memory and are faster to process. Large text fields may slow sorting operations.

Stability: Stable sorting algorithms maintain the original order of records with identical keys. This is important when preserving chronological or logical order.

Compatibility: The key should match the data type. Sorting dates as strings may produce incorrect order, whereas numeric or datetime types are appropriate.

Frequency of use: Attributes that users sort, search, or filter by most often make the most valuable keys for sorting, retrieval, and analysis.
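Two of these criteria, compatibility and stability, can be seen in a short Python sketch. The order records are hypothetical. Sorting dates stored as "MM/DD/YYYY" text compares characters rather than dates, while parsing them with `datetime.strptime` gives true chronological order. Because Python's built-in sort is stable, sorting by a secondary key first and the primary key second produces a multi-level order.

```python
from datetime import datetime

# Hypothetical order records: (order date as text, region)
orders = [
    ("03/15/2023", "West"),
    ("12/01/2022", "East"),
    ("01/20/2023", "West"),
    ("01/20/2023", "East"),
]


def order_date(order):
    """Parse the text date so that it compares as a real date."""
    return datetime.strptime(order[0], "%m/%d/%Y")


# Compatibility: sorting the raw strings compares characters, not dates
by_text = sorted(orders, key=lambda o: o[0])
# Parsing to datetime gives the correct chronological order
by_date = sorted(orders, key=order_date)
# Stability: sort by region (secondary key), then by date (primary key)
by_date_then_region = sorted(sorted(orders, key=lambda o: o[1]), key=order_date)

print(by_text[-1])   # ('12/01/2022', 'East'): the oldest order ends up last
print(by_date[0])    # ('12/01/2022', 'East')
print(by_date_then_region)
```

The two-pass version gives the same result as a single sort on a composite key, `sorted(orders, key=lambda o: (order_date(o), o[1]))`, which is usually the more direct choice.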

Careful selection of the sort key ensures that sorted data supports the intended analysis and operations efficiently.

Data Sorting Pitfalls

While sorting is essential, improper implementation can introduce errors or inefficiencies. Common pitfalls include:

Inefficient algorithms: Using algorithms like bubble sort on large datasets leads to slow performance. Choose algorithms suited to data size and structure.

Memory limitations: Sorting large datasets in memory may fail. External or distributed sorting methods may be required.

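Data integrity: Unstable sorts can change the relative order of records that share the same key, breaking an existing chronological or logical order and leading to misinterpretation. In spreadsheets, sorting one column without the rest of its rows is a more serious mistake, because it separates values that belong to the same record.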

Incorrect sort key: Sorting by an inappropriate attribute can lead to misleading results.

Over-reliance on sorting: Sorting is useful, but sometimes filtering, grouping, or aggregation is more efficient for analysis.

Awareness of these pitfalls helps avoid errors and ensures accurate, reliable data processing.

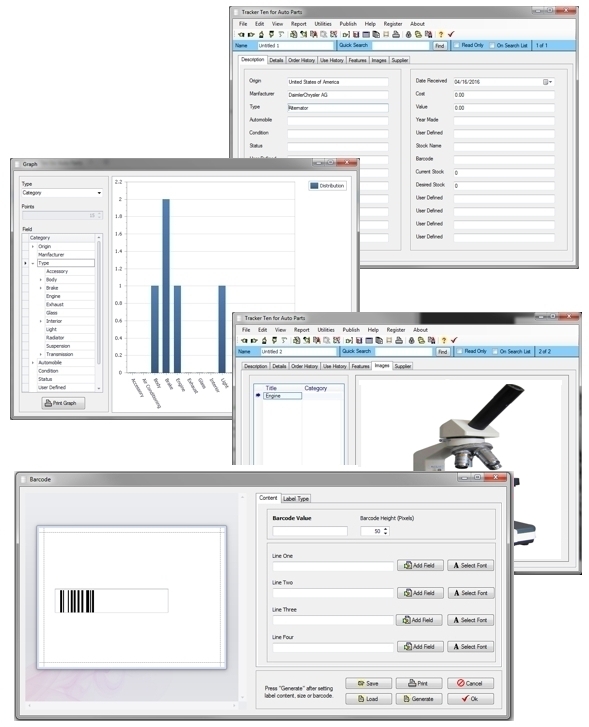

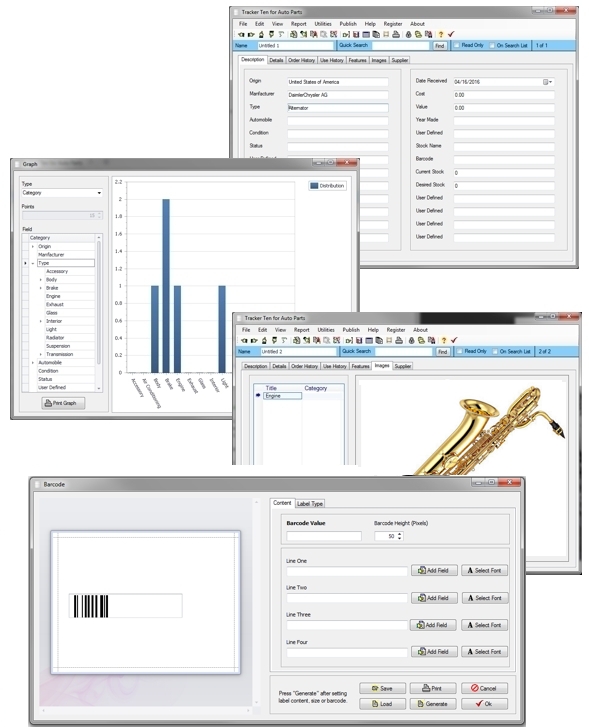

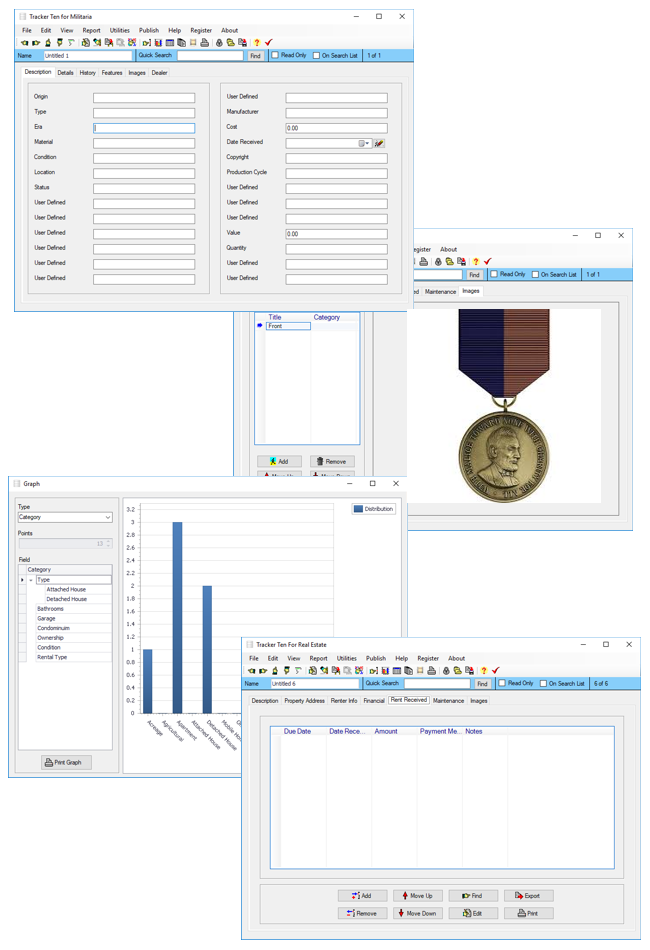

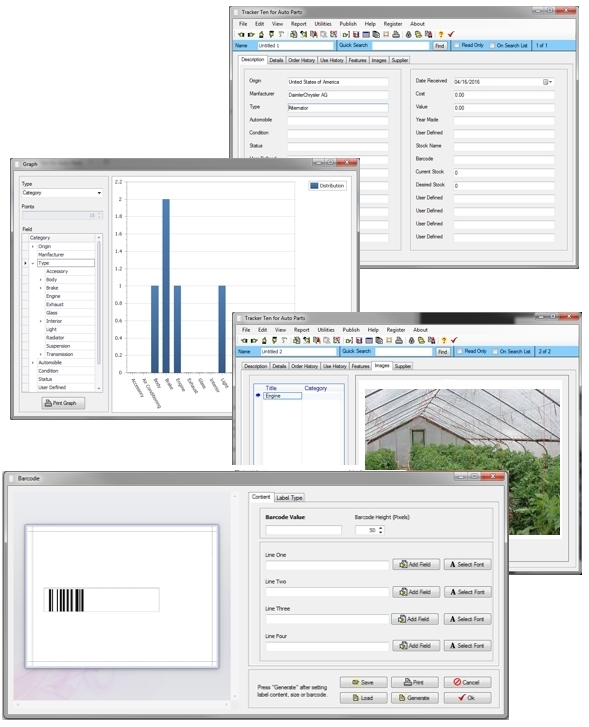

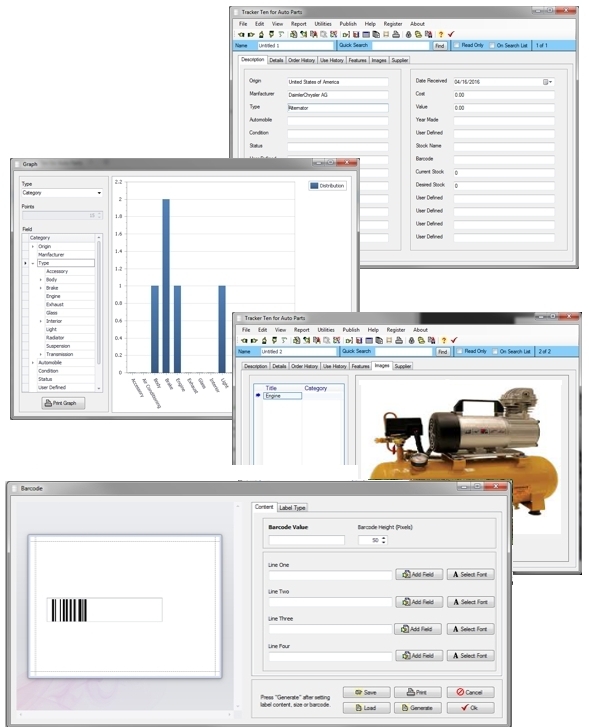

Database Software with Sort Features

Most database and spreadsheet software includes built-in sorting functionality. Programs like Microsoft Excel, Access, SQL-based databases, Google Sheets, and Tracker Ten allow sorting by one or more keys, ascending or descending, and offer additional features like stable sorts and multi-level sorting.

Using database software for sorting provides several advantages:

Multi-column sorting: Sort data based on multiple attributes to create hierarchical orderings.

Integration with filtering: Combine sorting with filters to extract subsets efficiently.

Automated sorting: Set automatic sorting rules for newly added data.

Large dataset handling: Modern software optimizes sorting for large datasets without overloading memory.

Visualization readiness: Sorted data can be directly used in charts, reports, and dashboards.
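Each product exposes multi-column sorting through its own interface. As a sketch of the same idea in SQL, the Python example below uses the standard `sqlite3` module with an in-memory database; the `sales` table and its rows are hypothetical. `ORDER BY region ASC, amount DESC` sorts by region first and, within each region, by the largest sale first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("West", "Lamp", 120.0),
        ("East", "Desk", 340.0),
        ("West", "Chair", 310.0),
        ("East", "Lamp", 95.0),
    ],
)

# Multi-column sort: region A to Z, then highest amount first within each region
rows = conn.execute(
    "SELECT region, product, amount FROM sales ORDER BY region ASC, amount DESC"
).fetchall()
conn.close()

for row in rows:
    print(row)
# ('East', 'Desk', 340.0)
# ('East', 'Lamp', 95.0)
# ('West', 'Chair', 310.0)
# ('West', 'Lamp', 120.0)
```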

For a variety of database products with robust sorting features, browse our site to explore options for small to large-scale datasets.

Looking for Windows database software? Try Tracker Ten
